Taking a turn in my career

I spent the last 30-ish years of my professional life working on what I generally refer to as "compute systems abstractions". I started in 1994 at IBM working on "the PC" (Personal Computer) almost by chance and I specialized, over the years, in physical servers, operating systems, hardware virtualization, containers and functions.

I can pin almost all these transitions to an "aha moment" I had. For example, I vividly remember when, in October 2001, I met the lead IBM xSeries 440 engineer in Kirkland (WA) and he told me:

"no one wants this server (which has 16 CPUs), it's just too big, it's useless. We are talking to this company called VMware that is building this software hypervisor that would allow a customer to chunk the 440 into smaller Windows servers. Here is the (ESX 1.1) CD-ROM, go try it out and let me know what you think".

I do remember walking out chuckling and thinking this would never work ("a software hypervisor that sits between the server and the OS? Bah!"). Until I tried it in the lab the day after and thought "OMG, this thing is going to change the x86 world as we know it!". Something similar happened when I transitioned my focus from VMs to containers.

A few months ago, I had another "aha moment" and, for the first time, it was not associated with a compute abstraction. I was rebasing this blog from WordPress to a more modern S3/CloudFront serverless deployment when I found myself needing to write a CloudFront function that removed www if my blog was reached via www.it20.info. My development journey typically starts with a Google search. It often lands on one or more GitHub repositories where I get inspired by an example (often multiple pieces of examples) and ultimately, after lots of trial and error, I build my own code (slowly) based on those sparse findings. For this task, not having found a ready-to-use example, I was already budgeting a few hours of work (no shaming please). Then I figured I'd try one of these "GenAI" tools everybody was talking about and I asked something to the effect of: write a CloudFront function that generates a 302 redirect and strips out the "www" part of the hostname of the website, if it exists (I didn't take note of the exact prompt I used). And boom:

    function handler(event) {
        var request = event.request;
        var headers = request.headers;
        var host = request.headers.host.value;
        var uri = request.uri

        if (host.startsWith('www.')) {
            var host = host.replace(/^www\./, '');
            var response = {
                statusCode: 302,
                statusDescription: 'Found',
                headers:
                    { "location": { "value": "https://"+ host + uri } }
            };
            return response
        }

        return request;
    }

In less than 30 seconds I had a fully working CloudFront function ready to use. I found myself thinking (again): "This thing is going to change the world. And this time, drastically."
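As an aside, for anyone who wants to poke at a function like this without deploying it, here is a minimal sketch of a local check in plain Node.js. It assumes a mock event that carries only the two fields the handler reads (`request.uri` and `request.headers.host.value`); real CloudFront events contain more. The `makeEvent` helper and the `/about/` path are hypothetical names made up for illustration, not part of any AWS API.

```javascript
// Same behavior as the generated function, repeated here so the snippet runs on its own.
function handler(event) {
    var request = event.request;
    var host = request.headers.host.value;
    var uri = request.uri;

    if (host.startsWith('www.')) {
        // Strip the leading "www." and redirect to the bare hostname.
        var bareHost = host.replace(/^www\./, '');
        return {
            statusCode: 302,
            statusDescription: 'Found',
            headers: { "location": { "value": "https://" + bareHost + uri } }
        };
    }

    // No "www." prefix: let the request continue unchanged.
    return request;
}

// Hypothetical helper: builds a minimal mock of a viewer-request event.
function makeEvent(host, uri) {
    return { request: { uri: uri, headers: { host: { value: host } } } };
}

// A request to the www hostname should come back as a redirect response.
var redirected = handler(makeEvent('www.it20.info', '/about/'));
console.log(redirected.statusCode, redirected.headers.location.value);

// A request to the bare hostname should pass through as the original request.
var passedThrough = handler(makeEvent('it20.info', '/about/'));
console.log(passedThrough.uri);
```

Run with `node`, the first call returns a 302 pointing at `https://it20.info/about/` and the second returns the request untouched. Note that the function only looks at `request.uri`, so any query string on the original URL is not carried over to the redirect.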

As I dove deeper into this topic trying to read and explore more, it became clear to me this had the potential to change our (professional) lives way more than an incremental compute abstraction (which I think of as "geological periods"). This transition has the potential to affect the industry in a way that is similar to the transition from the mainframe to the PC or the transition from on-prem to the cloud (which I think of as "geological eras").

But let's talk more about these geological eras.

The transition from mainframes to PCs (and x86 systems in general) has democratized access to computers, increasing the segment of the population that could access IT for professional (and personal) use:

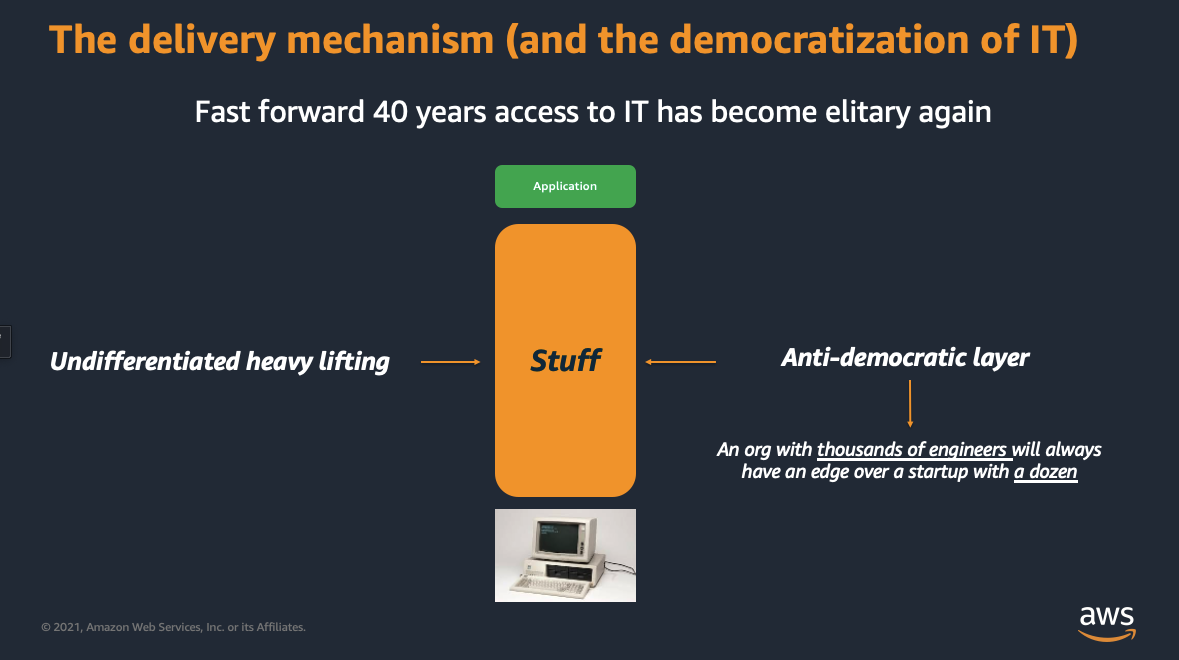

While many think of cloud in the same vein ("cloud is just someone else's computer that you can rent hourly"), I think this view is anchored very much in the early days of cloud. I like to think of cloud more in the context of democratizing access to complex software stacks. In fact, as computers started to become more broadly used, the gating started to happen on the complex and rich software stack you could run on them (and the skills it required to run it). You really needed to be (or employ) an expert to be able to use them to their fullest. Cloud managed services have, once again, democratized access to IT, allowing, for example, lean startups to compete with large established organizations (or simply allowing large established organizations to remove undifferentiated heavy lifting and focus on their specific business requirements):

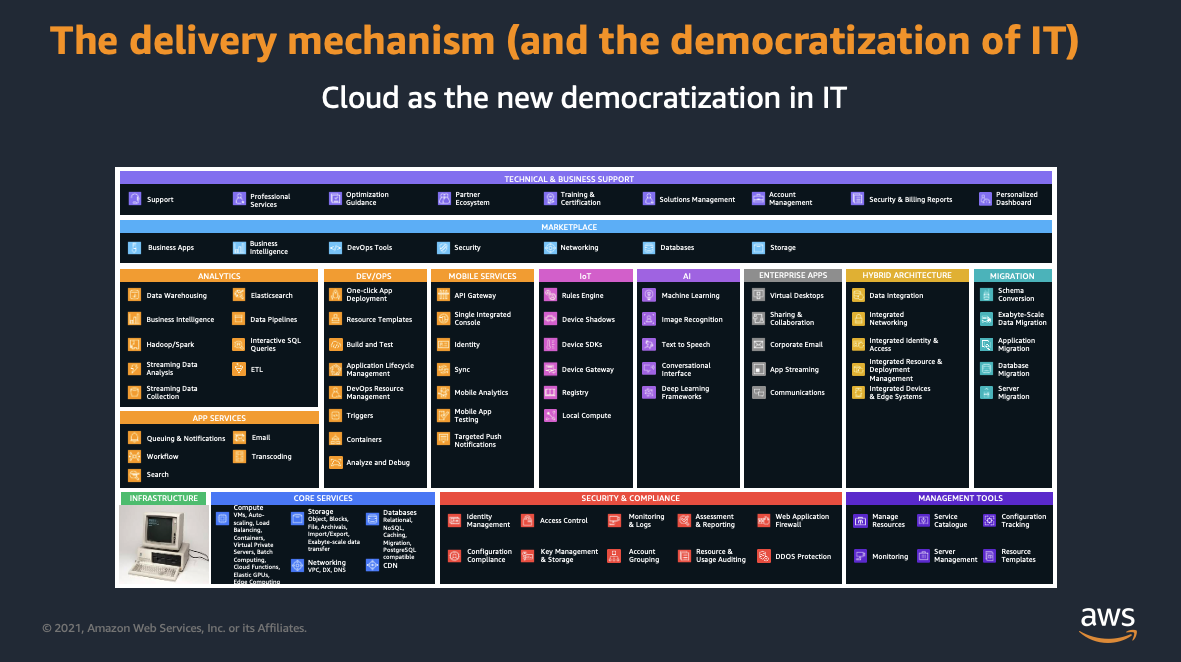

Over the years, all major cloud providers announced a large set of managed services that fill the "Stuff" portion of that stack so that developers could focus on getting their job done (i.e., generating business value).

Interestingly enough, while solving for these democratization opportunities, cloud providers created more friction in other areas of the builder journey. For example, how do you navigate the richness of services AWS offers, and how do you consume them effectively, efficiently and following best practices? In retrospect, and without realizing the bigger picture at the time, this is the same problem I had when I on-boarded to AWS as a Solutions Architect in 2017. I documented the six-month milestone of my journey in this 5-year-old blog post. This is an excerpt from that post that matters for this discussion, and it's where I documented my personal challenges back then:

[ from: https://it20.info/2018/05/my-first-6-months-at-aws/ ]

- You get to know all of the AWS services. Before joining AWS I thought this was the most difficult challenge. After joining AWS, I figured this was the easiest part (again, relatively speaking). Don’t get me wrong, it’s a lot of work learning all the services, it’s a moving target and you will never be a guru on all of them. The challenge here is to know as much as possible of all of them.

- In many situations you have to work backwards from the customer’s needs and translate their business objectives into a meaningful architecture that can deliver the results. This isn’t so much of a problem when the need is “I have 1.674 VMs in my data centers and I would like to move them to 1.674 instances on AWS”. But it is a challenge when the need is expressed in business terms such as “I need to do predictive maintenance on my panel bender line of products” or “I want to build a 3D map of my plants to offer training without having employees come on-site”. This is quite a challenging mental task because, among the many difficulties, it requires a good understanding of the customer’s business to actually understand (or better, anticipate proactively) the use case being discussed.

- The third and possibly most difficult part is this though: once you get a good understanding of the services portfolio, once you get a good understanding of the use case, which one of the potential many combinations of services do you use to deliver the best solution to the customer? You will find out that there is an (almost) infinite way to build a solution but, in the end, there are only a handful of different combinations of services that make sense in a given situation. There are 5 dimensions that you usually need to consider when designing a solution: operation (you want the architecture to be easy to maintain and easy to evolve), security (you want the architecture to be secure), reliability (you want the architecture to be reliable and avoid single point of failures), performance (you want the architecture to be fast) and costs (you want the architecture to be as cost-effective as possible). It is not by chance that these aspects are the foundation pillars of the AWS Well Architected Framework. Finding the balance among all these aspects is key and possibly the most challenging (and interesting) task for any Solutions Architect. The other reason for which this is challenging is because it builds on top of #1 and #2: it assumes you have a good understanding of all of the services (perhaps the one you don’t know well and fail to consider is the one that would be the best suited) and it assumes you get a good understanding of the use case and the business needs of the customer.

Among the many dimensions that generative AI can have a big impact on, this to me is one of the most interesting. In the last 10+ years we have operated with the assumption that not all potential users are IT experts and (relatively) very few users have direct and dedicated access to IT experts. We are at the beginning of a new transition that will open the door of cloud computing to non-expert builders AND will make expert builders, potentially, an order of magnitude more productive.

I am very excited (and a bit sad) to transition out of the "compute" world and dive full time into this new industry evolution. This doesn't mean I won't touch compute anymore; ultimately, generative AI will just make it easier than ever to use compute (among other things). That said, my job is now going to focus more on how we build this transition at AWS. There is work in this space that we have already publicly announced, such as CodeWhisperer and Bedrock. But there is also a lot of exciting work happening behind the scenes (that I cannot talk about yet, for obvious reasons).

My role, similar to what I was doing for the containers and serverless services until a few days ago, will focus on working closely with product managers and engineers to build (err, help build) these new experiences, as well as working with the broad market in general to explain and evangelize what we are doing in this space.

I am both excited and scared (because it's very much outside of my comfort zone) about this new role!

Massimo.