A framework to adopt generative AI assistants for builders

Last week I was in Istanbul for the local Community Day, where I talked about Generative AI assistants (e.g., Amazon Q Developer). One of the concepts I presented is a framework for thinking about how to adopt these assistants. The framework tries to address common questions people have on the topic: Are these assistants useful? Can we trust them? Will they replace us? And so forth.

Farrah captured a picture of my slide, and I committed to writing a blog post to (try to) walk people through what I have in mind.

Patrick also asked for a better version of the slide.

So here is my attempt, broken down as I presented it.

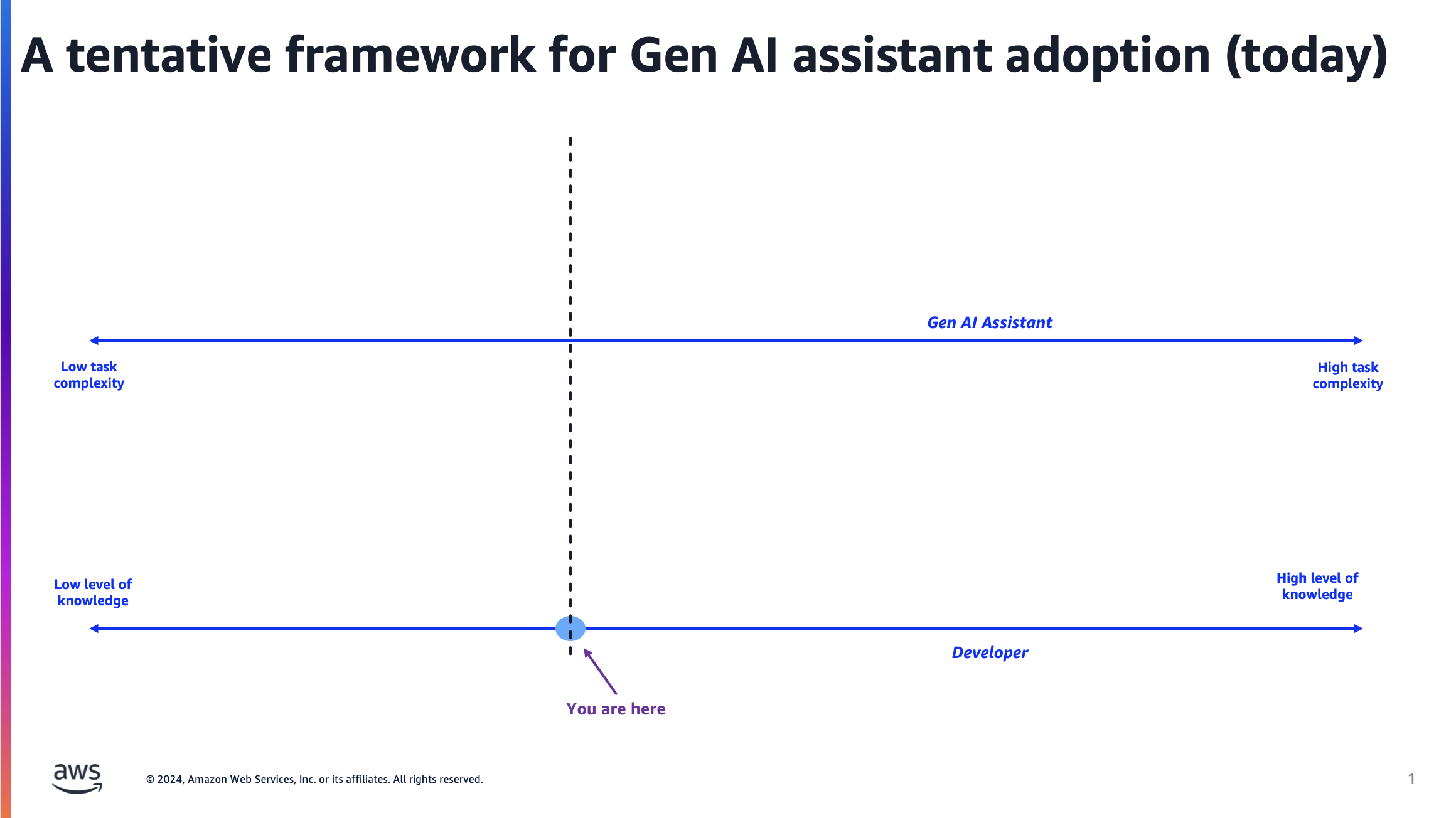

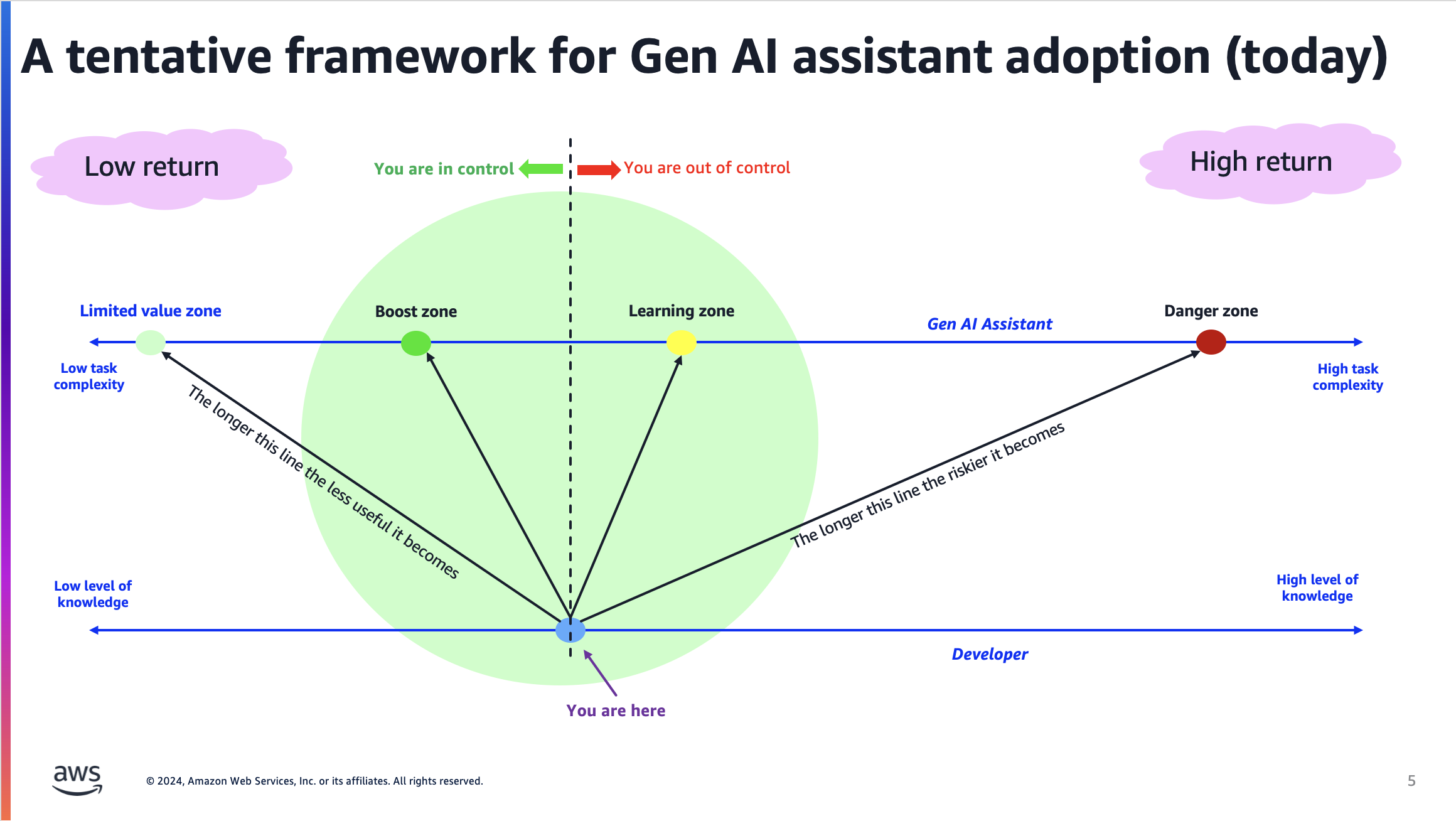

You and the assistant

It starts with two spectrums: the developer's knowledge level and the complexity of the task at hand. The latter maps to what the assistant is capable of helping you with. Different assistants have different skills and abilities, and not all of them will be able to help with "high task complexity". Also, where the developer falls on the former spectrum is relative to the task they need to carry out. You could be the lead developer for your organization's mission-critical Java application but, if you are trying to write tests for a new Rust application you are toying around with, you may sit on the far left of the "knowledge level" spectrum.

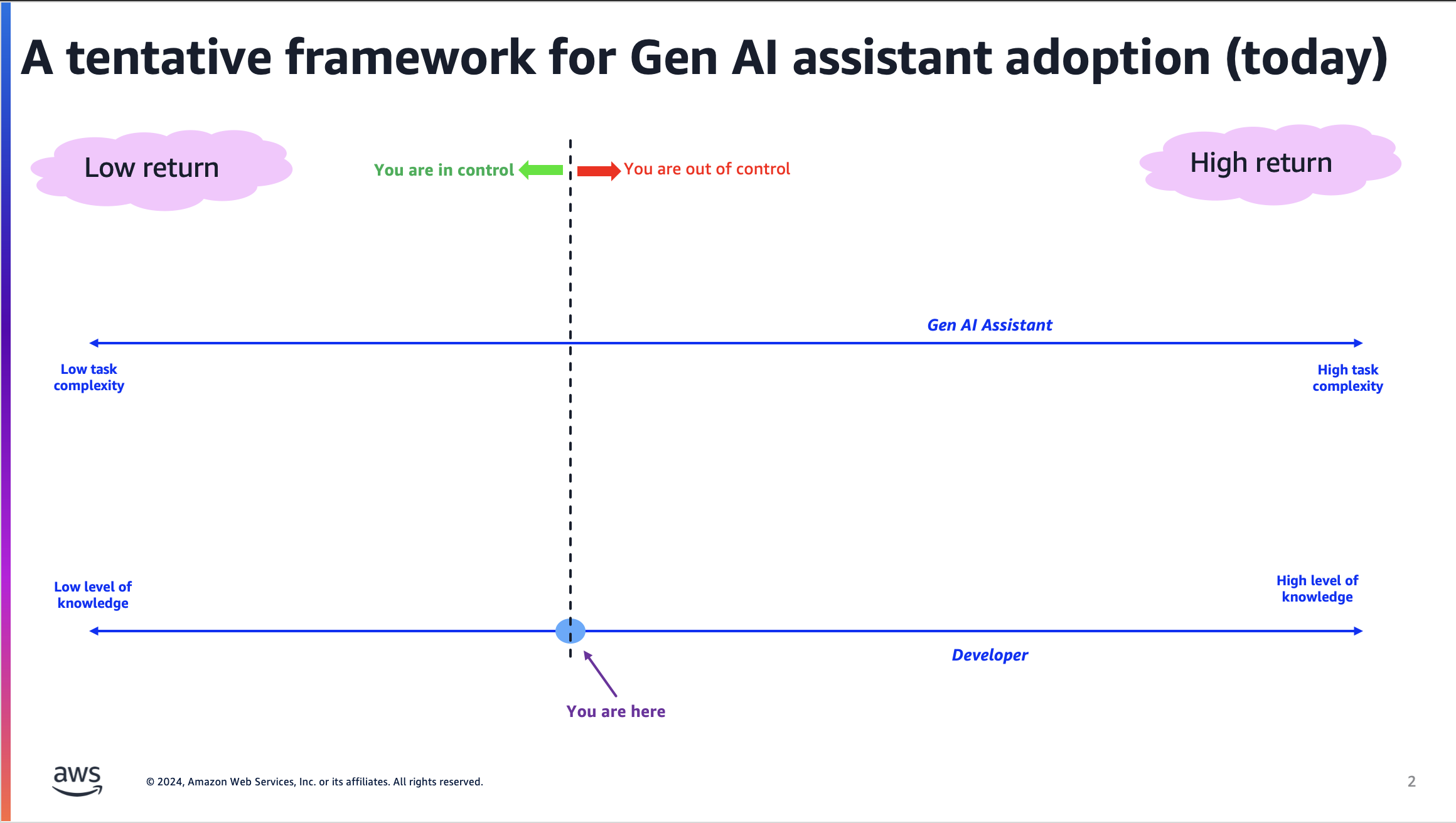

Being in control vs. extracting value

Once you figure out where you sit on these spectrums, the next important observation is that there is a tension between two key aspects of using assistants: 1) being in control ("can I trust assistants?") and 2) extracting value from them ("are these assistants useful?"). These two dimensions pull against each other. On one hand, you may want to extract as much value as possible (i.e., high return), but you do not want to completely lose control. On the other hand, while you want to stay in control, it wouldn't make sense to use an assistant if the value it returns is not enough.

The parallel I usually use here is flying a plane with autopilot. It's OK to use autopilot to remove the undifferentiated heavy lifting of flying 10 hours straight over the ocean, as long as you know you are always in control and can disable it should something happen that needs your attention. I, for one, would not (and could not) fly with autopilot because... I do not know how to fly a plane on my own.

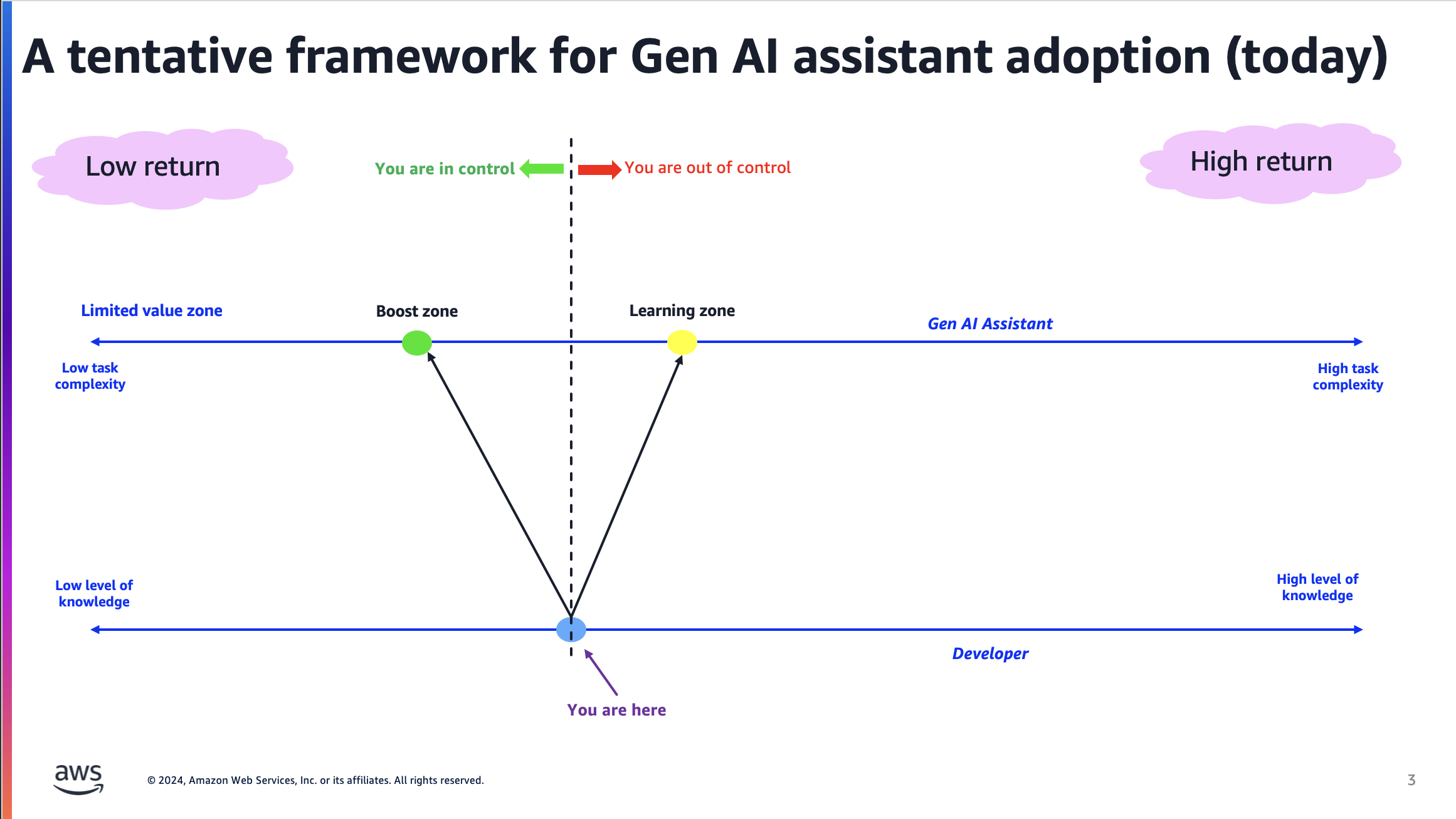

The "zones" where you want to be

Given this context, you can start thinking about how to use an assistant. There are a couple of "zones" where assistants may make a lot of sense. I call them 1) the "Boost zone" and 2) the "Learning zone".

The boost zone is where you use the assistant for tasks that are close to your skill level and where you remain in full control. You could do everything there yourself, but you want to leverage an assistant to boost your productivity and save time.

The learning zone is where you stretch your skills a bit and use the assistant to help you at a level of complexity you are not fully familiar with. You are, however, close enough to what you know that you can treat this as a learning opportunity and a way to explore uncharted territory (whose accuracy you can still easily verify). I tend to think of the learning zone as the 2024 version of "searching the Internet for something you don't know".

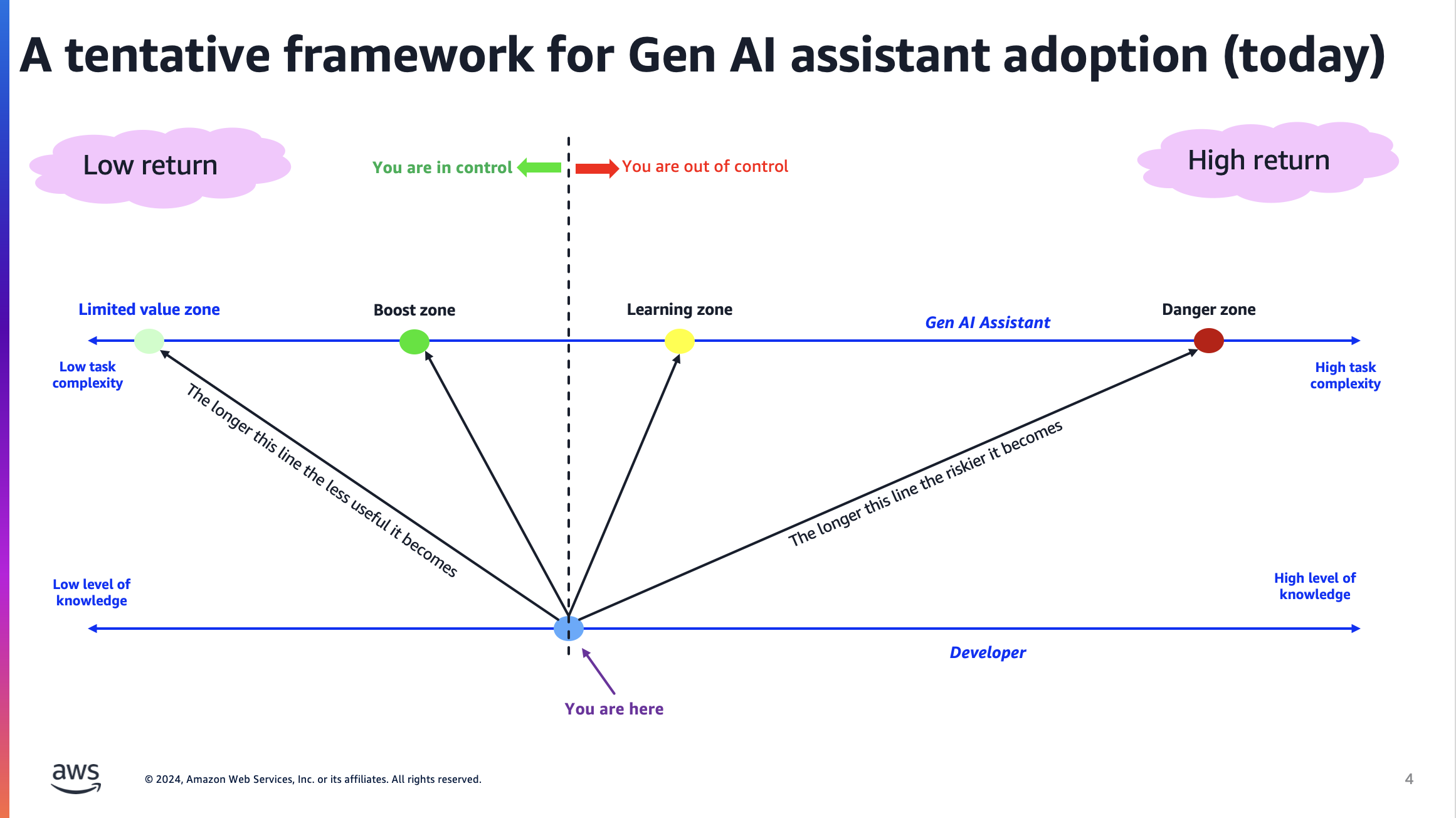

The "zones" where you may NOT want to be

Just like there are zones where you want to "hang out", there are also zones where you may not want to be. I call them 1) the "Limited value zone" and 2) the "Danger zone".

In the limited value zone, the assistant may not be worth the money you are spending on it. The value you extract is so low that it may be faster to do the work yourself than to delegate it.

Note that some tasks may be simple in nature but become "complex", or at least time-consuming, at scale. Think about doing something relatively simple (such as a trivial software upgrade) but having to do it across hundreds, if not thousands, of files in a repo. In this case the complexity comes from the amount of work required (automation) rather than from the challenge of any single task (knowledge).

The longer the line between you and the limited value zone, the less valuable the assistant becomes.

The danger zone sits at the opposite end of the assistant spectrum. While you can extract the most value from the assistant there, you need to be aware that you are losing control. In fact, the longer the line between you and the danger zone, the riskier it becomes. This is not to say you should never be in this zone. Perhaps you are running some extreme experiments, or perhaps your review chain is robust enough to mitigate the risk of working in a zone that is beyond your personal control.

The area of value

The last visual suggests where you want to be: what I call the area of value. You want to operate around the boost zone (improving your productivity in a very controlled manner), but you also want to stretch beyond your comfort zone to explore how to do things you are not familiar with (without taking too much risk).

Final considerations

This framework is a work in progress and a point-in-time view. I am definitely part of the "Generative AI assistants won't replace developers" team, although I am pretty sure the way I think about this adoption model will change as these assistants get better and trust concerns evolve over time. Feedback is more than welcome.

If you haven't started using these assistants, Amazon Q Developer offers a generous perpetual free tier that lets you use it in your IDE, in the AWS Console, and in the CLI.

Jump to this page to get started and enjoy it.

Massimo.