Using the Amazon Q feature development capability to write documentation

I have long been saying that generative AI English-to-code capabilities (that is, the ability of an assistant to produce code based on a prompt) are overrated (but very useful), while code-to-English generative AI capabilities (that is, the ability to explain a piece of code) are underrated (and likely even more useful).

I am obviously exaggerating to make a point, but I do believe that developers can create an incredible amount of untapped value with generative AI that goes beyond producing code. It's also much easier for a generative AI agent to write good documentation and explanations than it is to produce quality code.

To this end, I have started using the Amazon Q feature development capability to write documentation instead of developing code (yes, naming is hard). The Amazon Q feature development capability is designed to assign Amazon Q a task that it can carry out asynchronously. This is an alternative to executing tasks in a chat interface, where the user remains in control of the flow and the task gets resolved in a more transactional way.

For the record, this capability is available today in CodeCatalyst (here is a good blog post that talks about it) as well as in the IDE (here is the documentation page for this capability). In CodeCatalyst, Amazon Q feature development requires a Standard tier subscription, while in the IDE it requires an Amazon CodeWhisperer Professional license. Check here for all the Amazon Q pricing details.

Amazon Q feature development comprises two separate phases: a planning phase, where Q outlines a strategy to execute the task, and an execution phase, where Q implements the strategy. Note that in CodeCatalyst these two phases are available in public preview, while for the IDE version of the capability, at the time of this writing, only VS Code is supported.

I will spend more time writing about this capability in the future because I like it a lot. If you are interested in a traditional use case where it could be helpful, AWS Hero Matt Lewis has a great blog post about all Amazon Q for Builders features including the feature development capability. However, in this blog post, I want to focus on demonstrating how to use it "weirdly" (that is, writing documentation).

Writing a README file

The first example involves writing a README file in a folder of a git repository. A few years ago I built this repository to demonstrate how to deploy an application to AWS App Runner. I am quite happy with the README.md file at the root of the project, but this repository has a preparation folder that contains the code required to prepare the infrastructure to deploy the application. In a real-life situation this would be proper IaC, but I took a shortcut and built a bash script for that (shame on me). The README.md file in that folder is borderline embarrassing.

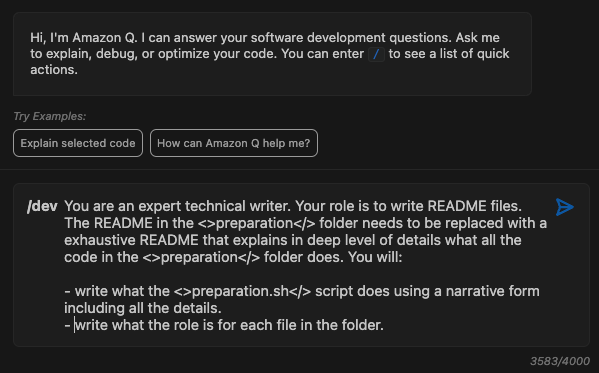

This is my intent (err... prompt):

You are an expert technical writer. Your role is to write README files.

The README in the `preparation` folder needs to be replaced with an exhaustive README that explains in deep level of details what all the code in the `preparation` folder does.

You will:

- write what the `preparation.sh` script does using a narrative form including all the details.
- write what the role is for each file in the folder.

For this first example, I want to use Amazon Q in the IDE.

First, I open my repository in VS Code; then I prompt Amazon Q with my intent using the /dev directive (which triggers the feature development capability):

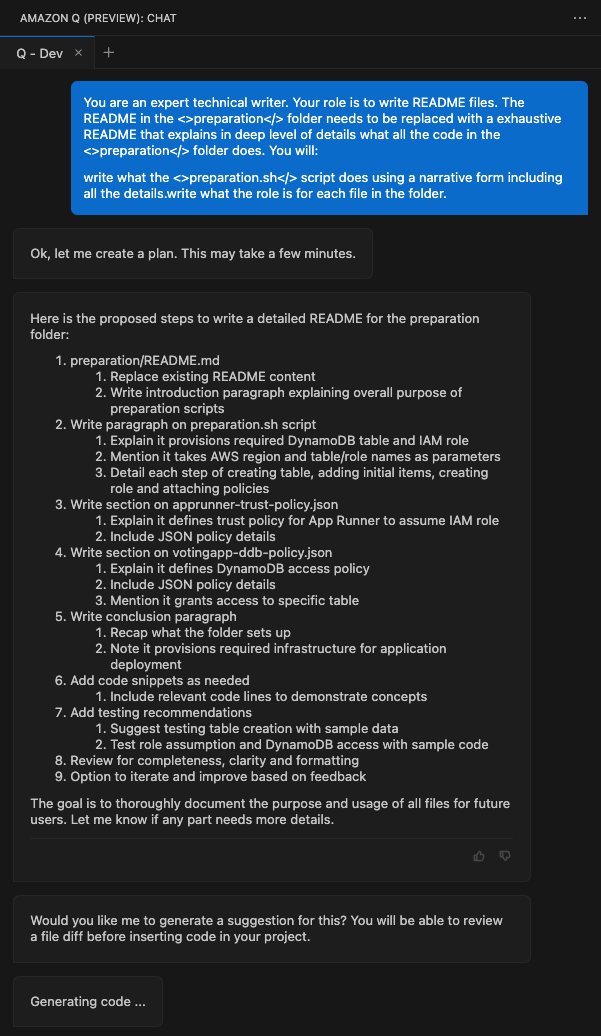

Amazon Q comes back with a plan for how it's going to implement the "feature" (again, this capability is really geared towards writing code; note how the plan includes some "testing" recommendations):

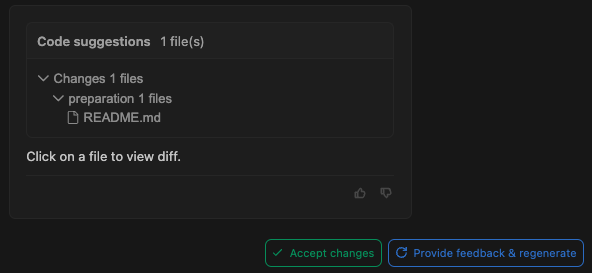

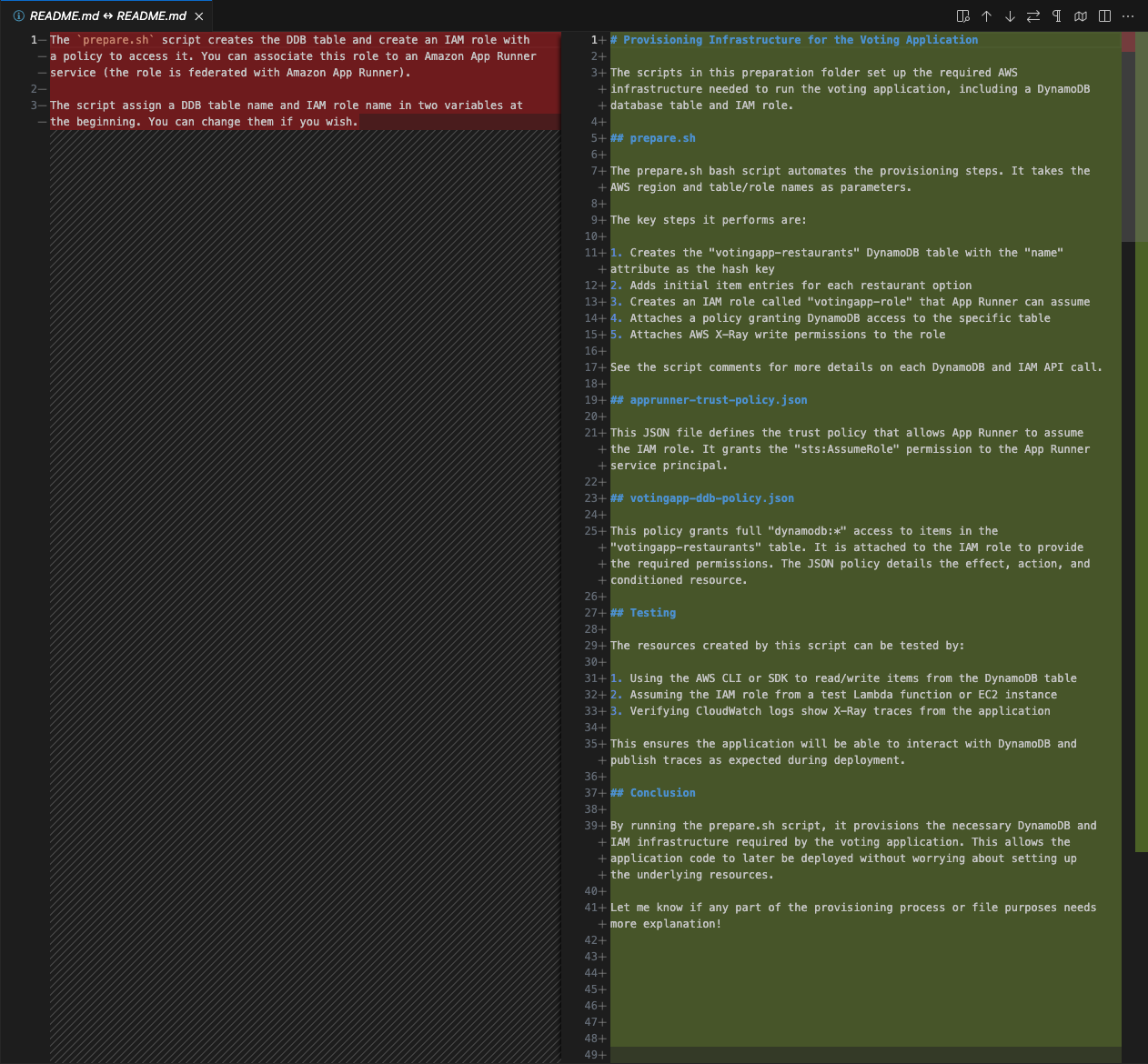

Once the second phase is concluded, Amazon Q presents a view of the files it has either created or modified. In my scenario, a minimal README file already existed. Once I have reviewed the proposal, I can decide whether to accept it or to steer the result by providing feedback in the chat and regenerating the output:

To review the proposal, you simply click on the file you want to review and VS Code opens a diff view. In this case, note how the README got expanded with a lot more information about all the files included in the preparation folder:

What you would usually do at the end of this process is accept the proposed changes and optionally tweak them before committing and pushing them to the repository.

I am still iterating and learning which prompts work best. This one seemed to produce documentation that I considered good enough for my needs. Note that some of the standard directives, such as "You are a" or "Your role is", may not be required, because they are often already part of the LLM system prompt in this class of assistants. Based on my tests, though, these additional directives seemed to lead to better results.

Documenting Python functions

The second example involves adding comments and explanations to the Python functions in the app.py file in the root of the same project. This file has no comments and, while the code in this example is straightforward, you may have situations where you wish your code were better documented.

To show this, I am going to use Amazon CodeCatalyst, because the same Amazon Q feature development capability is available there. The way you trigger this capability in CodeCatalyst is different from what we have seen in the IDE: you create an issue in the CodeCatalyst project and assign it to Amazon Q. Q then goes through a similar process and eventually opens a PR (Pull Request) with the proposed changes.

I found that different prompts lead to different results. For example, this is one of the first prompts I started with, and it did NOT lead to good results:

You are an expert Python developer. Your role is to document programs.

The file `app.py` needs to be augmented with code comments.

Your task is to parse the file, find all the Python functions and write a 3-line description about what each function does.

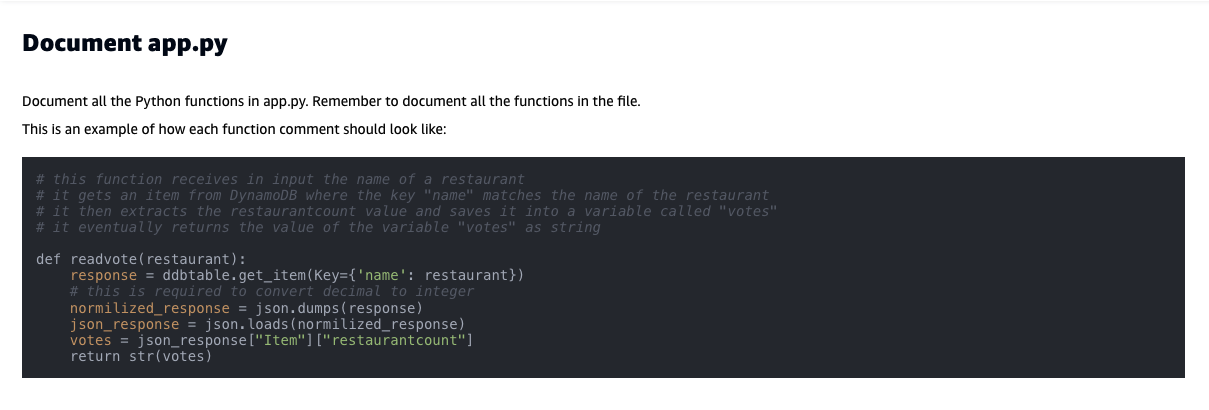

After some trial and error I found that the following prompt worked better. This prompt includes an example of how I would like the comment to be constructed:

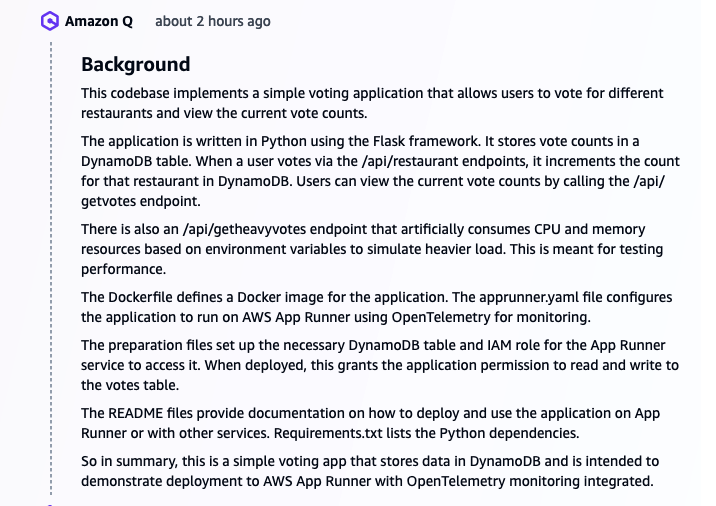

First, Amazon Q interacts with the issue and posts a generic summary of the content of the repository:

Then, Amazon Q starts to strategize and posts a plan of actions in the same issue:

Note: the formatting is not great, I suspect because of how some of the markdown I used in the prompt was interpreted. Also, note how the plan again ends with some test actions: this capability is geared towards producing code, and I am stretching the use case it's optimized for a bit.

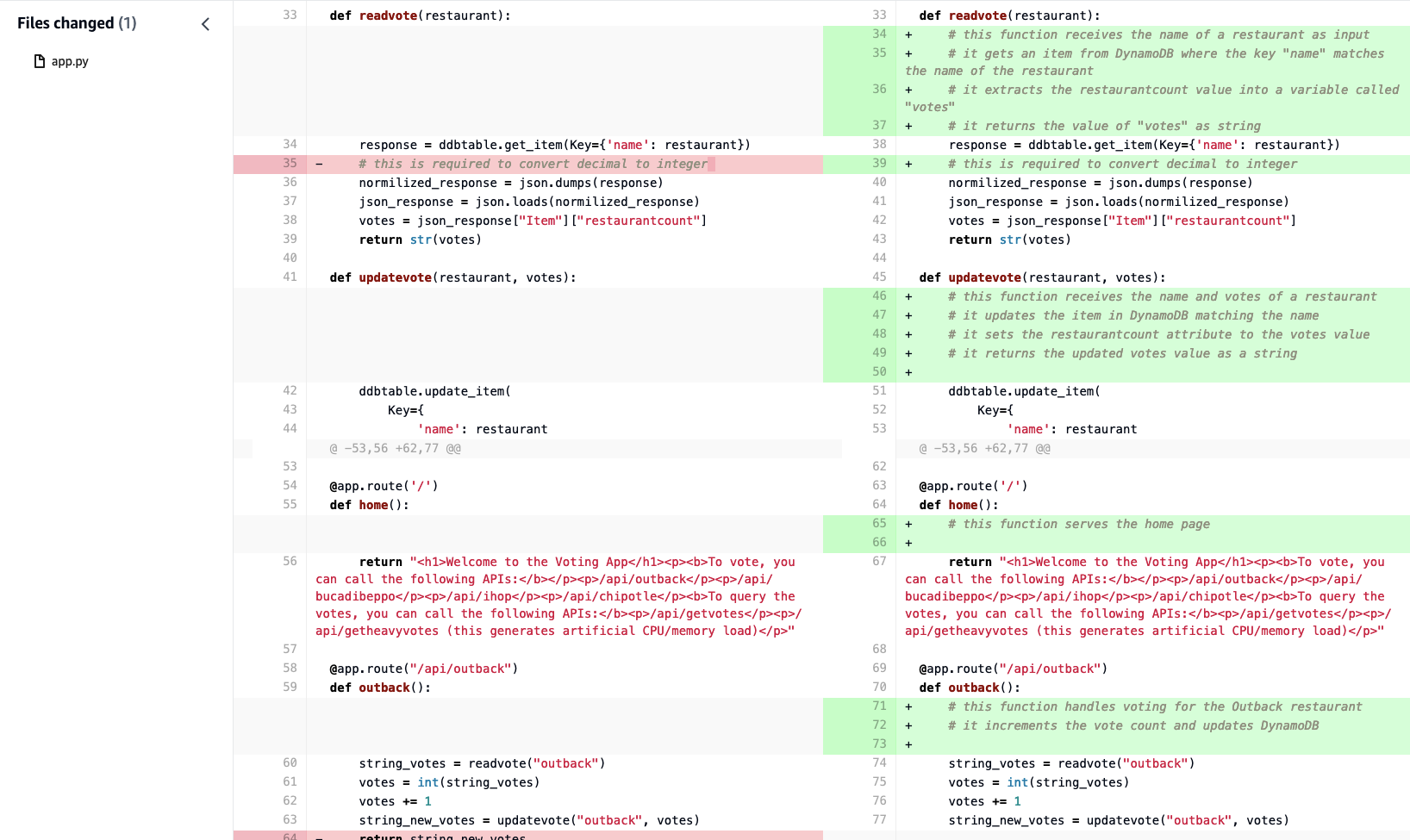

This is where you can collaborate with Amazon Q to steer the plan. In this particular situation, I decided to just Proceed and open a PR. If you open the PR and look at the changes, this is what you will see:

As you can see, Q has added in-line comments that describe what each function does.
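Since the diff is only visible in the screenshot, here is a purely hypothetical illustration of the kind of in-line comment the first prompt asked for (a three-line description above each function). The function `build_greeting` and its body are invented for this sketch; they are not taken from the project's app.py, nor from what Amazon Q actually generated.

```python
# Hypothetical example: this function is NOT from the project's app.py.
# Builds the greeting message returned to a caller.
# Falls back to a generic greeting when no name is provided.
# Returns the greeting as a plain string.
def build_greeting(name: str = "") -> str:
    if not name:
        return "Hello, world!"
    return f"Hello, {name}!"


if __name__ == "__main__":
    print(build_greeting())
    print(build_greeting("Massimo"))
```

The comment describes behavior rather than restating the code line by line, which is the kind of explanation a code-to-English assistant is well placed to produce.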

At this point, you have the option of commenting on the changes and having Q make adjustments to what it generated, or you can simply treat this PR as any other PR.
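If you review the PR like any other, one quick aid is a script that flags functions still lacking documentation. The sketch below is not part of Amazon Q or of the workflow described above; it is a hypothetical, standard-library-only helper (the function name `undocumented_functions` and the file name `check_docs.py` are made up). Because Python's `ast` module discards `#` comments, it assumes a function counts as documented when it has a docstring or a `#` comment on the line directly above its `def` (or above its first decorator).

```python
import ast
import sys


def undocumented_functions(source: str) -> list[str]:
    """Return the names of functions that have neither a docstring
    nor a '#' comment on the line directly above their definition."""
    tree = ast.parse(source)
    lines = source.splitlines()
    missing = []
    for node in ast.walk(tree):
        if not isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            continue
        # Decorators sit above the 'def' line, so start from the first one.
        first_line = min([node.lineno] + [d.lineno for d in node.decorator_list])
        # ast line numbers are 1-based, so index first_line - 2 is the line above.
        above = lines[first_line - 2].strip() if first_line >= 2 else ""
        if ast.get_docstring(node) is None and not above.startswith("#"):
            missing.append(node.name)
    return missing


if __name__ == "__main__":
    # Hypothetical usage: python check_docs.py app.py
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8") as f:
            for name in undocumented_functions(f.read()):
                print(f"{path}: undocumented function {name}")
```

For example, `python check_docs.py app.py` would print one line per function that matches neither condition. The heuristic is deliberately simple: a comment placed inside the function body, for instance, is not recognized.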

Conclusions

In this short blog post, I wanted to show how generative AI can potentially help with use cases that go beyond generating code. The examples I have shown are rather basic, but hopefully they give you an idea of the direction these assistants may take, in the context of their code-to-English capabilities, for more sophisticated use cases and requirements. You can already use Amazon Q (and other assistants) to "explain" pieces of code you have in focus, but these asynchronous capabilities enable new workflows and solutions that cannot be achieved transactionally in a chat interface.

If you spend hours documenting code (or, worse, if you choose not to), the future might be bright(er).

Massimo.