Open standards, open source, OpenStack and the TCP/IP of Cloud APIs

A few weeks ago Rackspace and NASA announced an initiative called OpenStack, aimed at providing an(other) open source alternative for building public and private clouds. This generated some reactions in the open source community, like this one from the OpenNebula team. By the way, do not confuse OpenNebula, one of the other open source cloud implementations mentioned, with NASA's Nebula, an open source compute-related cloud implementation that is part of the OpenStack announcement along with Rackspace's CloudFiles, an open source storage-related cloud platform. If you find this hard to follow, let's remove the redundant "cloud" and "open source" words and express the concept in mathematical form: it's OpenNebula vs. Nebula + CloudFiles = OpenStack. Easy, isn't it?

My colleague Winston Bumpus has already addressed the difference between open standards and open source in this post, so I am not going to talk about that. Not to mention that Winston is at least an order of magnitude more authoritative than I am when it comes to standards: he is director of standards architecture at VMware and president of the Distributed Management Task Force Inc. (DMTF).

Instead, I'd like to talk about what turned out to be the two major talking points around OpenStack. The first one goes like this: "OpenStack is open source, hence it's free". The second one goes: "OpenStack is open source, so I can customize it to my needs and use this customization as a differentiator in my industry".

I am not going to go deep into the "free" argument, as this has been a never-ending discussion for years. If "free" were always better, I'd say you would all be using Ubuntu, MySQL, OpenOffice and the like in your corporate IT, whereas I'll go ahead and speculate that you are most likely using all flavors of Windows, Oracle and Office. Note that I don't have a political agenda in saying this: if I said something against closed source software I'd have all my vSphere colleagues hunting me down, and I'd get the same treatment from my SpringSource and Zimbra folks if I had something bad to say about the open source software model. In the final analysis I think the choice boils down to the overall value of any given product. And I am using the word value loosely here, including things like feature set, support, roadmap integrity, commitment, openness, compatibility etc. For many organizations these are more important than getting something "for free". I apologize to the NASA and OpenNebula folks for having been a bit picky in my opening, but I wanted to make the point that simplicity is a value too.

The second talking point ("it's open source so I can customize it") is far more interesting. First, this argument pertains more to service providers building public clouds than to enterprise organizations building private clouds. Having worked with service providers across Europe for about six months now, I have seen and heard many interesting things. The first thing I've heard is "we don't want to develop the cloud stack ourselves. It's not our business. We'd rather use an out-of-the-box product and build on top of it". In addition, I have seen at least a couple of high-level cloud stack prototypes built on top of vSphere by two of these service providers and, believe me, they look the same from a core functionality perspective: multi-tenant support, web portals, catalog management and external programming interfaces. Sure, the branding of the portals looked very different (obviously), but if you set that aside for a minute, you'd note that the logic, the flow and the processes involved, from on-boarding a user all the way to that user being able to deploy a workload, were all very similar. Long story short, I have two data points at the moment: the first says these service providers don't want to develop these core functionalities in-house, and the second says that their internal mockups and prototypes look very similar from a functionality perspective.

This doesn't add up, I thought. Assuming this is representative of what service providers think in general, what's the point of having an open source stack that you can customize? You don't want to hack it, because you don't want to build the stack in-house. And you don't need to hack it in the first place, because you are essentially building the same thing anyway (even when starting from scratch). You may argue that this is not in-house development but rather customizing an existing piece of software to your needs. On this point, I really think the burden is not so much in doing the one-shot customization but in maintaining it over time. By doing so you are essentially forking the vanilla open source software, and it may be difficult to incorporate new releases of the vanilla platform in the long run.

The whole point here is that, obviously, service providers need to differentiate from each other. However, an IaaS cloud offering has so many layers that one should wonder whether it is possible to differentiate at a level that is (well) above the core multi-tenant cloud functionality. In fact, there are tons of things a service provider can do on top of the cloud stack without having to touch the inside of the stack that provides the technology backbone. After all, many service providers use plenty of out-of-the-box products to build their offerings, and I am not sure anyone has ever thought about writing (or customizing) their own version of Oracle, Windows, vSphere or Tivoli. And I bet they are even using open source software (simply because it has value) without necessarily having recompiled a tweaked kernel, although that is possible.

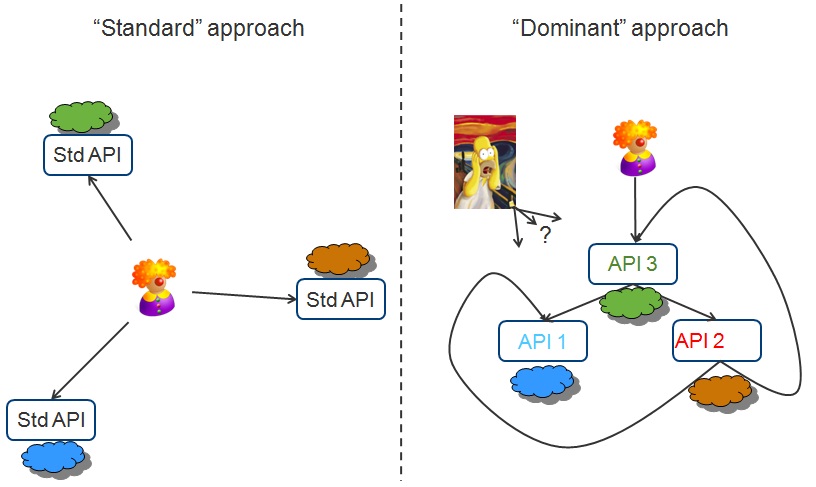

And while I am at it, I'd like to touch on cloud APIs and on openness as well. I am not a programmer, so this is not my territory; however, I'll give it a stab and say that there are really two approaches I have seen so far in the cloud space. I call the first one the "standard" approach and the second one the "dominant" approach. In the standard approach, no matter what the actual product implementation is, the interfaces are common and all technology vendors have agreed on a common "language". This is the standardization effort that the DMTF is coordinating. VMware has submitted the vCloud APIs to the DMTF in an attempt to define a common standard everybody could agree upon, making various cloud stacks interoperable.

With what I call the dominant approach, some vendors are trying to achieve the same result without going through a standardization process, using an abstraction/wrapping model instead: they essentially tell ISVs that their API 3 is able to abstract API 1 and API 2. In this case ISVs (and users) only have to write once to "reach many". The funny thing is that this is typically perceived as something that avoids lock-in. The reality is that you are locked into neither API 1 nor API 2, but you end up being locked into API 3 anyway. There is no way out of this other than the standard approach I mentioned before, since that approach has no "master" and "slave" concept.
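To make the lock-in argument a bit more concrete, here is a toy Python sketch of the wrapping model. It is purely illustrative: `Api1Cloud`, `Api2Cloud`, `Api3Wrapper`, their methods and `isv_application` are hypothetical names that do not model any real cloud interface. The ISV code never touches API 1 or API 2 directly, yet it is written entirely against the `deploy()` call that only API 3 defines.

```python
# Toy sketch of the "dominant" wrapping approach: API 3 abstracts API 1 and API 2.
# All class and method names are hypothetical and model no real cloud API.


class Api1Cloud:
    """A provider exposing its own native interface."""

    def launch_instance(self, image: str) -> str:
        return f"api1-vm-from-{image}"


class Api2Cloud:
    """A second provider with a different native vocabulary."""

    def create_server(self, template: str) -> str:
        return f"api2-server-from-{template}"


class Api3Wrapper:
    """The 'master' API: translates one call into each provider's native call."""

    def __init__(self, backend: object) -> None:
        self.backend = backend

    def deploy(self, image: str) -> str:
        # Every supported provider needs its own translation branch,
        # maintained by whoever owns API 3.
        if isinstance(self.backend, Api1Cloud):
            return self.backend.launch_instance(image)
        if isinstance(self.backend, Api2Cloud):
            return self.backend.create_server(image)
        raise NotImplementedError(f"API 3 does not support {type(self.backend).__name__}")


def isv_application(cloud: Api3Wrapper) -> str:
    # Written once against API 3 ("write once, reach many"),
    # but now dependent on API 3's deploy() signature.
    return cloud.deploy("web-tier")


print(isv_application(Api3Wrapper(Api1Cloud())))  # api1-vm-from-web-tier
print(isv_application(Api3Wrapper(Api2Cloud())))  # api2-server-from-web-tier
```

Note that supporting a new provider means adding a new branch inside `Api3Wrapper`, so whoever owns API 3 also owns the list of clouds the ISV can reach.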

Another problem with the dominant approach is that we are at such an early stage of cloud computing that the vendors implementing API 2 may try a similar approach (and win the master position) by abstracting API 3 and API 1. This would leave users and ISVs with the dilemma of which API they should develop against. The picture above summarizes and visualizes this recursive abstraction concept.

Interestingly enough, this is already happening. Last time I checked, for example, Red Hat's DeltaCloud project was able to abstract a variety of cloud interfaces including Amazon EC2, GoGrid, OpenNebula, Rackspace and others. At the same time, OpenNebula has recently announced an adaptor to connect to and abstract Amazon EC2 and DeltaCloud resources. This looks like an example where both the DeltaCloud and OpenNebula interfaces are masters and slaves at the same time. So now what are you going to do? What are you "standardizing" on?
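The same toy approach can illustrate the master/slave ambiguity. In this hypothetical Python sketch, `WrapperA` and `WrapperB` stand in for two abstraction layers (they are not the actual DeltaCloud or OpenNebula interfaces), and each one accepts the other as just another back end.

```python
# Toy sketch of recursive abstraction: two wrapper APIs, each able to treat
# the other as a back end. All names are hypothetical.


class NativeCloud:
    """A provider's own interface."""

    def start(self, image: str) -> str:
        return f"vm:{image}"


class WrapperA:
    """Abstraction layer A: exposes run() and translates it for its back end."""

    def __init__(self, backend: object) -> None:
        self.backend = backend

    def run(self, image: str) -> str:
        if isinstance(self.backend, NativeCloud):
            return "A>" + self.backend.start(image)
        if isinstance(self.backend, WrapperB):
            return "A>" + self.backend.provision(image)
        raise NotImplementedError(type(self.backend).__name__)


class WrapperB:
    """Abstraction layer B: exposes provision() and can wrap layer A."""

    def __init__(self, backend: object) -> None:
        self.backend = backend

    def provision(self, image: str) -> str:
        if isinstance(self.backend, NativeCloud):
            return "B>" + self.backend.start(image)
        if isinstance(self.backend, WrapperA):
            return "B>" + self.backend.run(image)
        raise NotImplementedError(type(self.backend).__name__)


# Here A is the master and B the slave...
a_on_top = WrapperA(WrapperB(NativeCloud()))
# ...and here it is the other way around.
b_on_top = WrapperB(WrapperA(NativeCloud()))

print(a_on_top.run("db"))        # A>B>vm:db
print(b_on_top.provision("db"))  # B>A>vm:db
```

Both stacks end up on the same native cloud, but an ISV still has to choose whether to code against `run()` or `provision()`, which is exactly the dilemma of which API to develop against.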

The way I see this evolving is that these cloud APIs will have to be like TCP/IP: no matter what the product is, it implements the very same language, so that heterogeneous systems (in the case of TCP/IP) or different service providers (in the case of clouds) can interoperate transparently. And this requires a certain level of flat standardization; after all, TCP/IP didn't become so widely adopted because it was able to abstract IBM's SNA, Novell's IPX/SPX, Microsoft's NetBEUI and others.
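By contrast, a TCP/IP-style standard can be sketched as a single contract that every provider implements natively. Again this is a hypothetical toy in Python: `CloudStandard`, `ProviderX`, `ProviderY` and `deploy()` are made-up names, not the vCloud API or any DMTF specification.

```python
# Toy sketch of the "standard" approach: one agreed interface that every
# provider implements natively. All names are hypothetical.
from typing import Protocol


class CloudStandard(Protocol):
    """The shared contract every provider agrees to implement."""

    def deploy(self, image: str) -> str: ...


class ProviderX:
    def deploy(self, image: str) -> str:
        return f"x:{image}"


class ProviderY:
    def deploy(self, image: str) -> str:
        return f"y:{image}"


def isv_application(cloud: CloudStandard) -> str:
    # Written against the shared contract only; no wrapper sits in between,
    # so no third party owns a "master" API.
    return cloud.deploy("web-tier")


for provider in (ProviderX(), ProviderY()):
    print(isv_application(provider))  # prints x:web-tier, then y:web-tier
```

Here switching providers means passing a different object, and there is no wrapper layer whose owner becomes the new point of lock-in.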

The last thought for service providers is this: while this level of standardization will certainly make it easier for customers to switch from one vendor to another (which, understandably, is something you don't like), I'd look at it the other way around. Think about how many public cloud customers you will be able to on-board simply because they are confident they can leave you whenever they want. Think about how many customers are not buying into public clouds today simply because they know it's easy to get in but difficult to get out. Why do you think x86 is so popular and proprietary platforms are in decline? The market rules in the end.

This is going to be a win-win for everybody.

Massimo.