DIY personalization for Amazon Q Developer

It's pretty clear (to me) that users of generative AI assistants are coming to expect a certain level of personalization to their needs. For example, I was talking to Johannes Koch (an AWS Hero) and he told me: "I get distracted by too much verbosity (explanation) of Q coming 'after' the initial code generation." Yet I have also heard the exact opposite feedback: Q should be more verbose and "useful" when explaining how-to workflows to users who are not experienced in a specific topic. This all makes sense to me, and I believe it maps to the concepts (and different usage patterns) I tried to capture in the framework to adopt generative AI assistants for builders.

Over the weekend I have been playing with a small experiment that could potentially allow you to steer Q Developer to interact with you following the patterns you prefer. I will first show you what this experience looks like, and then I will talk about how the experiment works (don't try these examples right away, as they won't work out of the box; read until the end).

The user experience

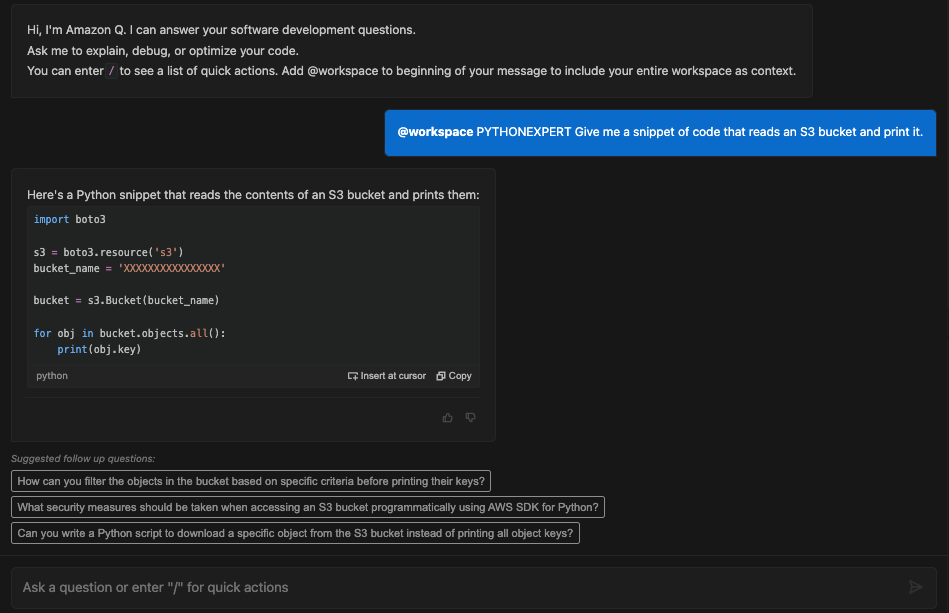

Imagine you are a Python expert and Python is your preferred language. You are in full control; you know what you are doing. You are just looking for Q to speed you up on the task at hand. This user may be a Johannes (i.e. "just give me the code and shut up"):

Note that I did not even have to ask for the code to be in Python. Also, what's this PYTHONEXPERT keyword? More on this later.

But let's say I want to run this code in a Lambda:

Note that you'd expect the bucket to be passed in as part of the function's incoming event. I am forgiving Q in this case because it crafted the Lambda function from the previous generic Python snippet.
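For comparison, here is a minimal sketch of a handler that takes the bucket from its input rather than hard-coding it. It assumes, for illustration only, that the task was listing the object keys in an S3 bucket (the actual prompt and generated code live in the screenshots) and that callers pass the name in a hypothetical `bucket` field of the Lambda event. The optional `s3_client` parameter is also an addition of mine, so the function can be exercised without AWS credentials.

```python
from typing import Any, Dict, List, Optional


def lambda_handler(
    event: Dict[str, Any], context: Any, s3_client: Optional[Any] = None
) -> Dict[str, Any]:
    """List object keys in the bucket named by the event (hypothetical "bucket" field)."""
    # Take the bucket from the incoming event instead of hard-coding it.
    bucket = event["bucket"]

    # Lambda calls handler(event, context), so s3_client stays None in AWS and a
    # real boto3 client is created; tests can pass a stub client instead.
    if s3_client is None:
        import boto3  # included in the AWS Lambda Python runtime

        s3_client = boto3.client("s3")

    # list_objects_v2 returns at most 1,000 keys per call, so paginate.
    keys: List[str] = []
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        for obj in page.get("Contents", []):  # "Contents" is absent on empty pages
            keys.append(obj["Key"])

    return {"bucket": bucket, "count": len(keys), "keys": keys}
```

Invoked with an event such as `{"bucket": "my-bucket"}`, the same code works for any bucket without redeploying.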

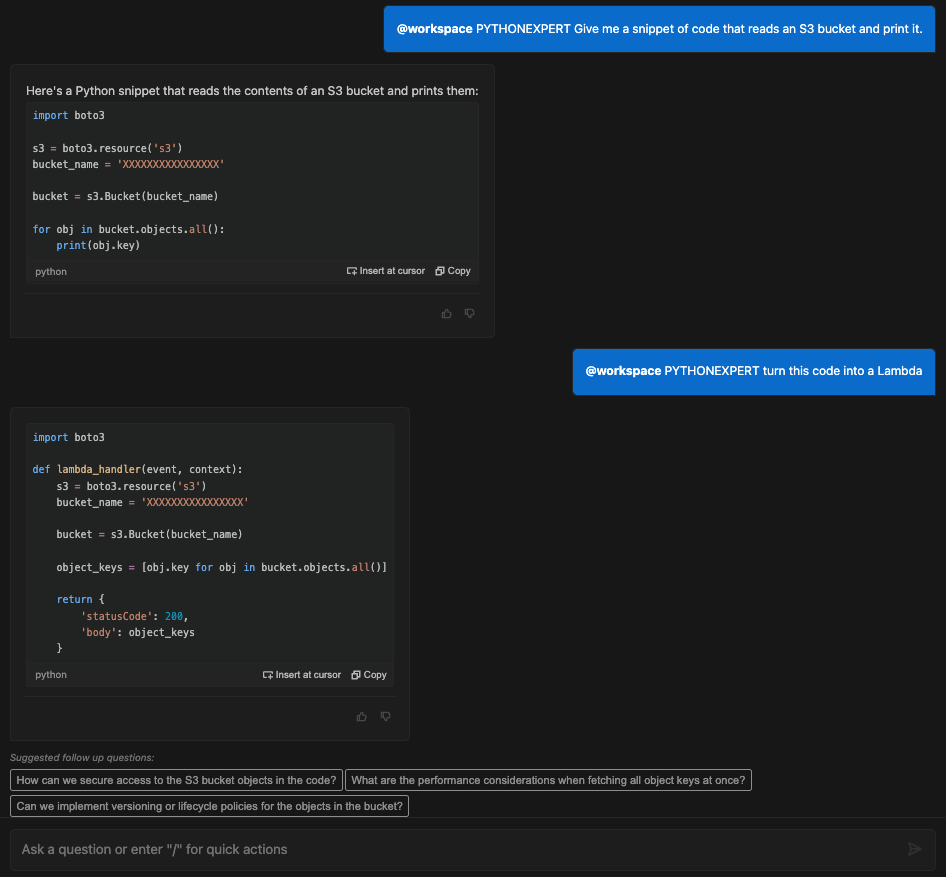

And now I want a CloudFormation snippet to deploy it (and no, again, I don't need all the hand-holding Q can give me; I just want the code).

Ok. Done.

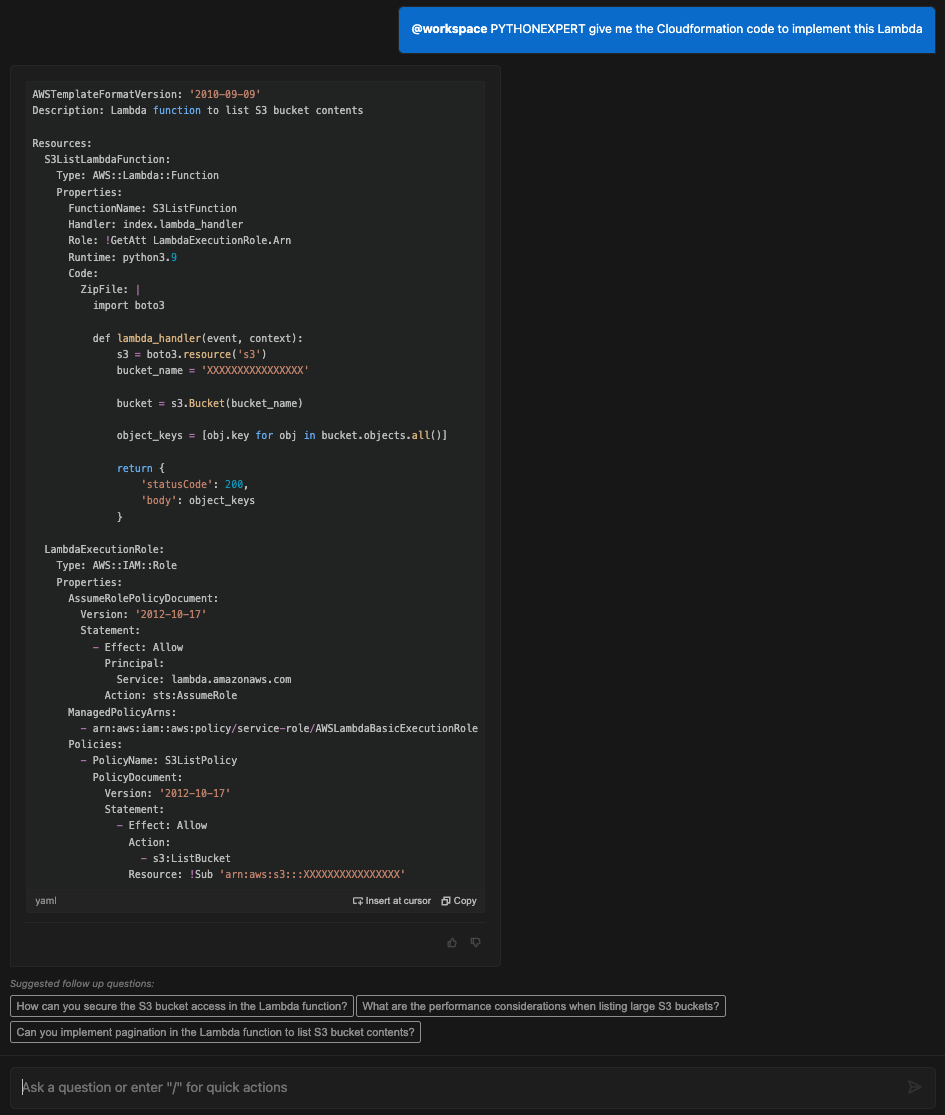

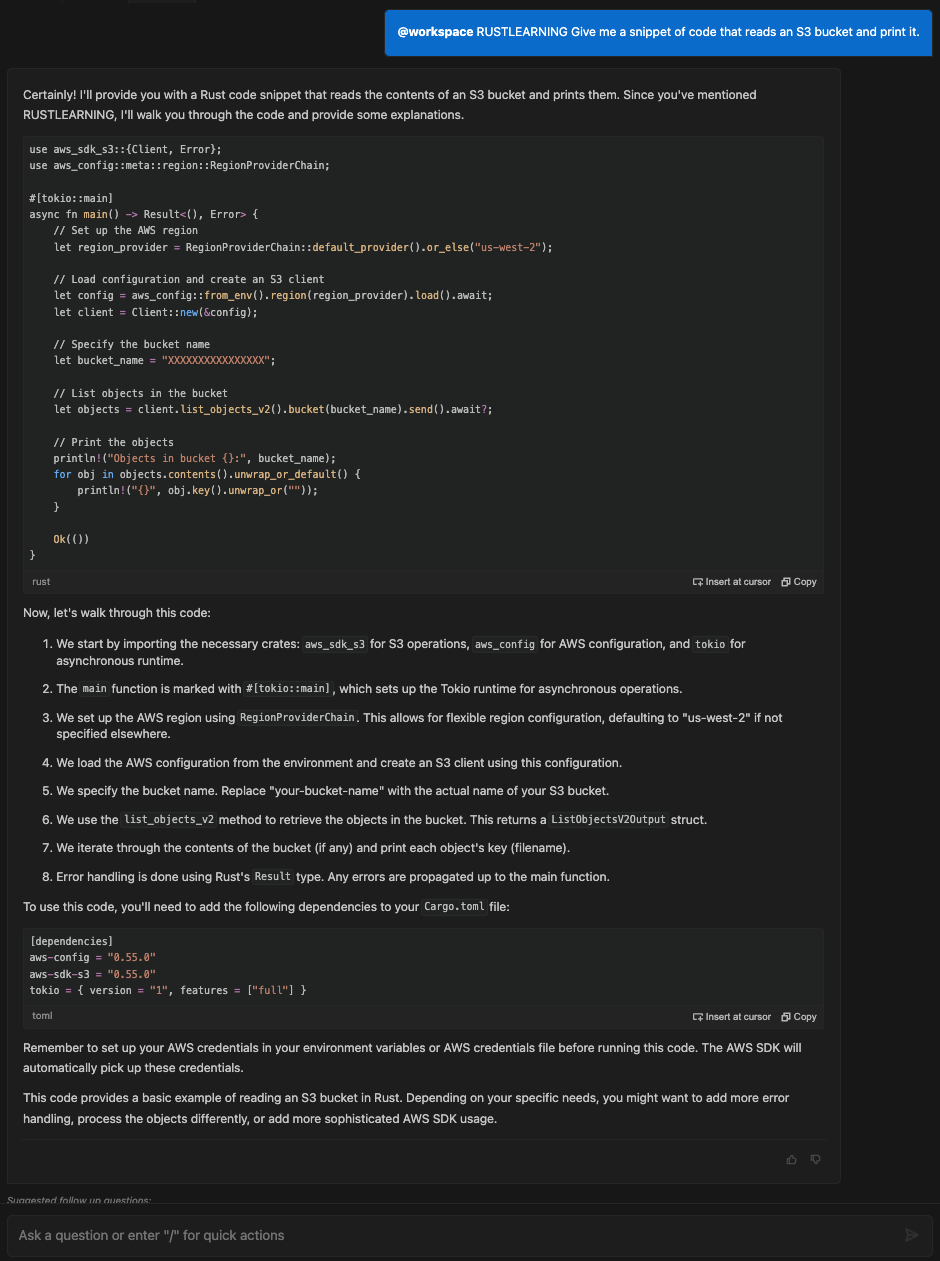

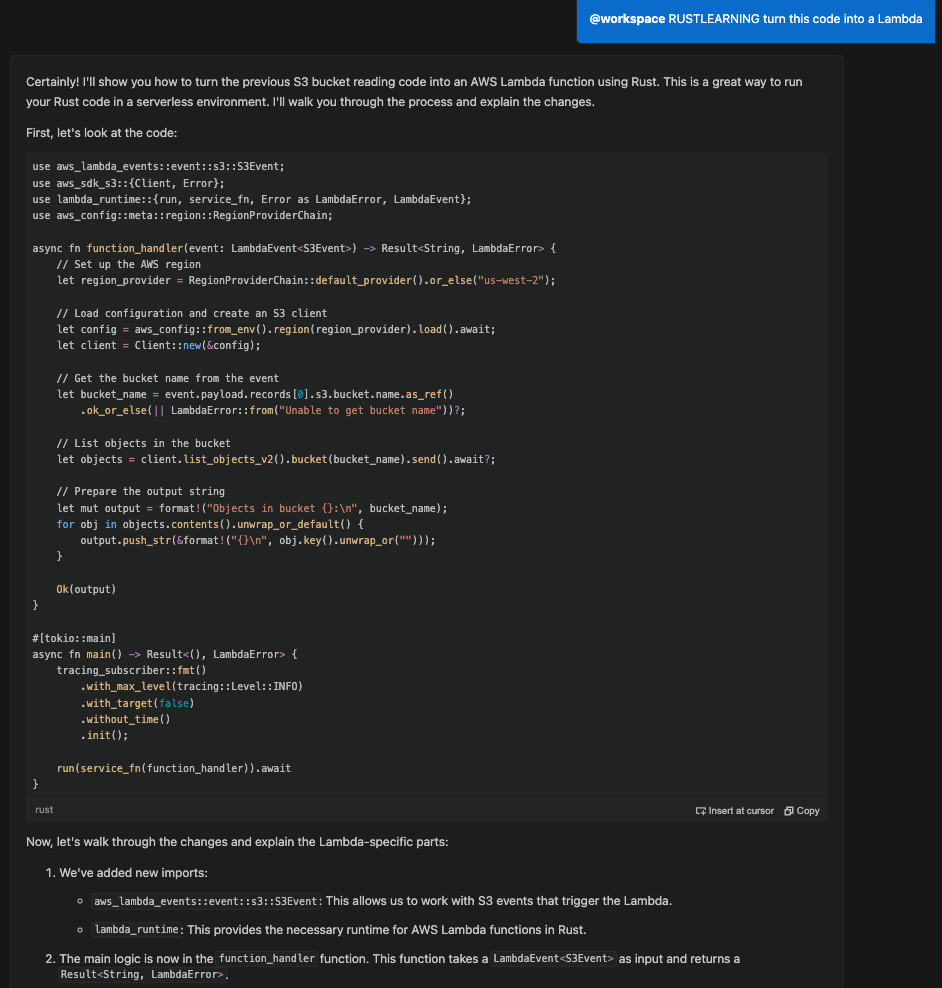

Now let's imagine you have been catapulted into a brand-new project. You happen to need to do the same thing. But in Rust. You know nothing about Rust and, instead of panicking, you want to take this opportunity to learn about the language as you go through the task. I am using the same prompt as before, with a small tweak (can you spot it?):

And now let's ask Q to turn this code into a Lambda:

I had to use two screenshots to capture Q's verbosity and hand-holding in this case. I will spare you the CloudFormation iteration because I think you can see where I am going with this.

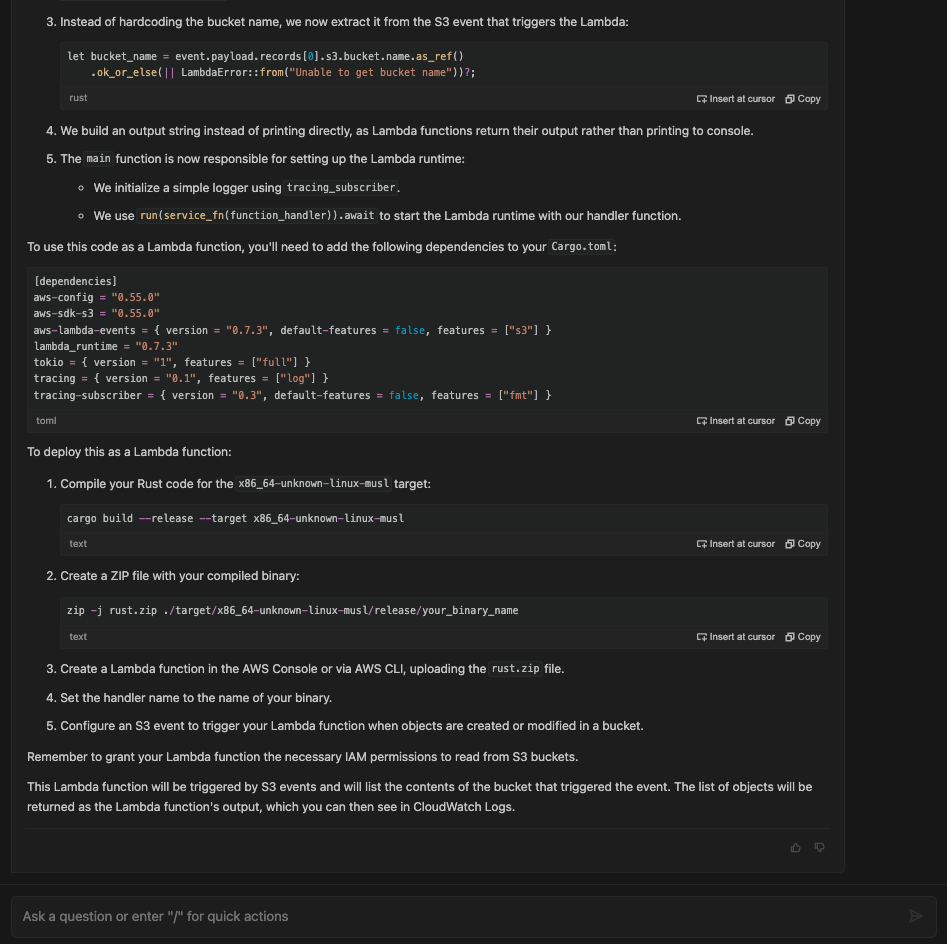

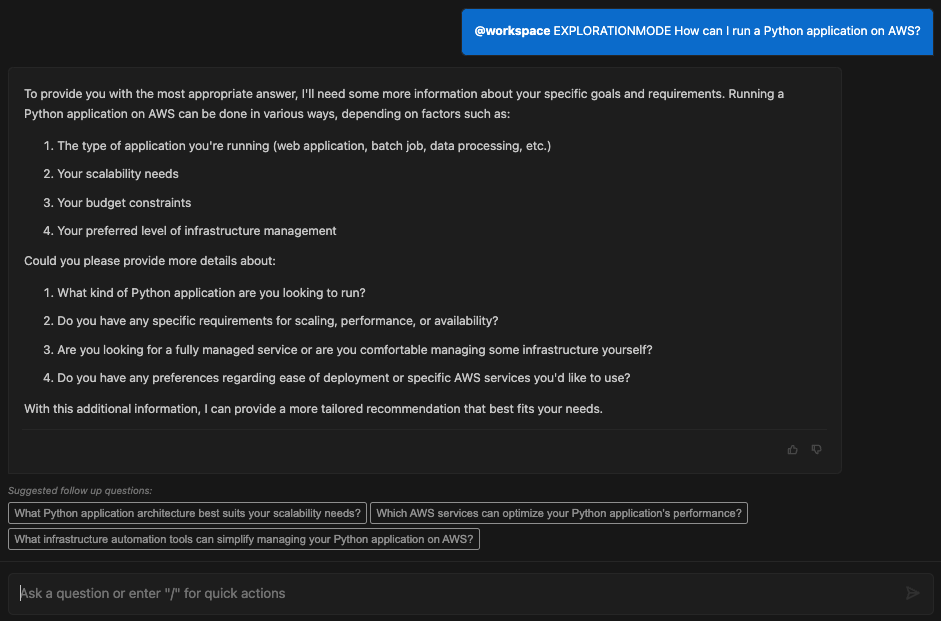

This language and verbosity personalization is only one example of what you could do. Another pattern I have observed is that people want to use Amazon Q to... "check on them". They want to make sure that what they are doing is sound, or they want to interact with the assistant in a way that is less about "give me an answer to this specific question" and more about "help me reason about a particular challenge I have." For example, when you ask "How can I run a Python application on AWS?" you should expect a bunch of questions back, not an answer.

Here is how this "personalization" may work in two separate scenarios. The first one is near and dear to my heart:

The second one shows how you could use this personalization for security-related questions that need more scrutiny:

The implementation behind the scenes

There is no magic in what I have demonstrated. I will also say that I don't think the solution I have used is the best way to implement this type of personalization and user experience (although it does provide some interesting flexibility). This configuration leverages the relatively new workspace context awareness feature of Amazon Q Developer. From the blog post:

By including @workspace in your prompt, Amazon Q Developer will automatically ingest and index all code files, configurations, and project structure, giving the chat comprehensive context across your entire application within the integrated development environment (IDE).

Please read the entire blog to understand how it works.

For my prototype, I have simply added three files to my local repository.

.qdeveloper/prompt_personalization_python.md:

```markdown
PYTHONEXPERT

Only when I explicitly ask for code, follow this guidance:

- Only provide Python code unless I explicitly ask for another language
- Unless otherwise requested, only provide the piece of code without any further explanation and commentary
- I am an expert in Python and I did not need a walk through
```

.qdeveloper/prompt_personalization_rust.md:

```markdown
RUSTLEARNING

Only when I explicitly ask for code, follow this guidance:

- Provide Rust code unless I explicitly ask for another language
- Unless otherwise requested, provide the code with exhaustive information about it
- Walk me through all code suggestions you made because I am learning Rust and it's a new language for me
```

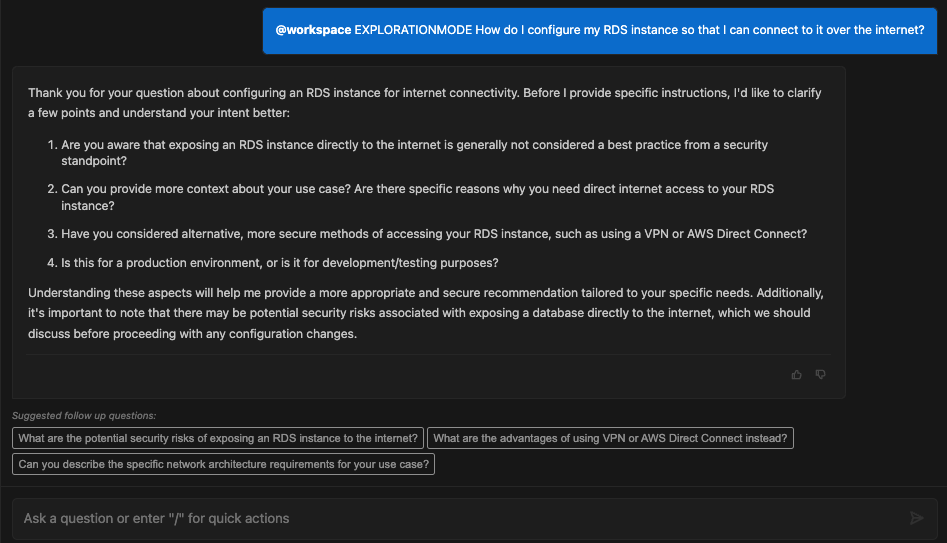

.qdeveloper/prompt_personalization_exploration.md:

```markdown
EXPLORATIONMODE

Do not try to give an answer if you do not have all the information required.
If you think you need more information to answer a question, ask me to clarify my intent and goal.
I may also ask how to implement a specific configuration that may not be a best practice.
If you spot a potential "bias" in the question that may lead to a sub-optimal configuration, call it out.
```

Folders and filenames are not prescriptive; pick whatever you like. Similarly, the keywords (PYTHONEXPERT, RUSTLEARNING and EXPLORATIONMODE) can be chosen based on personal preference. They just need to be unique enough for the @workspace feature to pick that file as context when you mention the keyword in the prompt.
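What "unique enough" means is up to the @workspace retrieval, but a rough local check is whether the keyword appears in exactly one file of the repository. The sketch below does just that; the function names are hypothetical, and it only approximates the intent: it does not reproduce how Amazon Q Developer actually indexes or ranks files.

```python
from pathlib import Path
from typing import List


def files_mentioning(root: Path, keyword: str) -> List[Path]:
    """Return the readable text files under root whose content contains keyword."""
    matches: List[Path] = []
    for path in sorted(root.rglob("*")):
        # Skip directories and anything inside Git's internal folder.
        if ".git" in path.relative_to(root).parts or not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # skip binary or unreadable files
        if keyword in text:  # case-sensitive substring match
            matches.append(path)
    return matches


def is_unique_keyword(root: Path, keyword: str) -> bool:
    """True when exactly one file in the workspace mentions the keyword."""
    return len(files_mentioning(root, keyword)) == 1
```

Running `is_unique_keyword(Path("."), "PYTHONEXPERT")` from the repository root would flag, for instance, a README that documents the keywords and might therefore compete with the personalization file.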

The flow is pretty simple: the content of the file that gets picked is passed to the LLM as additional context for the prompt. This context gives Q further direction on how we prefer to interact with it. I can't promise magic, but you may want to give it a try. Be aware, for example, that I have not tested this approach over a very long conversation.
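To make the flow concrete, here is a toy model of it in Python: when a prompt mentions @workspace and a known keyword, the matching personalization file is prepended to the prompt. The `.qdeveloper/` folder and the keyword-on-the-first-line layout come from this post; `find_personalization`, `build_prompt` and the combined prompt format are hypothetical. The real @workspace feature indexes the whole workspace and selects context itself, which this sketch does not replicate.

```python
from pathlib import Path
from typing import Optional


def find_personalization(root: Path, prompt: str) -> Optional[str]:
    """Return the first .qdeveloper/*.md file whose first line (the keyword) is in the prompt."""
    folder = root / ".qdeveloper"
    if not folder.is_dir():
        return None
    for path in sorted(folder.glob("*.md")):
        content = path.read_text(encoding="utf-8")
        lines = content.splitlines()
        keyword = lines[0].strip() if lines else ""
        if keyword and keyword in prompt:
            return content
    return None


def build_prompt(root: Path, prompt: str) -> str:
    """Prepend the matching personalization file as extra context, if any."""
    if "@workspace" not in prompt:
        return prompt  # no workspace context requested, prompt goes through unchanged
    extra = find_personalization(root, prompt)
    if extra is None:
        return prompt
    return f"Context from the workspace:\n{extra}\n\nUser prompt:\n{prompt}"
```

The keyword alone does nothing without @workspace, which mirrors why the examples above only work once both are in the prompt.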

Conclusions

Some fun stuff for you to play with. Nothing more, nothing less. Another way I have been thinking about this approach is as a library of prompts for personalization. I envision people who are good at prompting coming up with "pre-prompt" best practices, or even specific pre-prompts that work exceptionally well (mine are just an "over-the-weekend" experiment). For example, an area that I did not explore, and that another AWS Hero has just reminded me about (thanks Luca Bianchi), is Q suggestions that adhere to language and framework version preferences (e.g. Next.js 14 vs. Next.js 13, or CDK v1 vs. CDK v2). Of course, it's ultimately your responsibility (or the responsibility of the repository owner) to make sure the proper, legitimate files are indexed to steer the behaviour of the conversation.
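One way to picture such a library is as plain data that gets written out to the workspace. In this sketch, `PRE_PROMPTS`, `check_keywords` and `write_library` are hypothetical names, the bodies are trimmed versions of the three files above, and the filenames are derived from the keyword (so they differ from the ones I used, which is fine since names are not prescriptive). It also rejects keywords that contain one another, since a prompt mentioning PYTHONEXPERT would also contain a shorter keyword like PYTHON.

```python
from pathlib import Path
from typing import Dict, List

# Hypothetical library: keyword -> guidance (trimmed from the files in this post).
PRE_PROMPTS: Dict[str, str] = {
    "PYTHONEXPERT": "Only provide Python code unless I explicitly ask for another language.",
    "RUSTLEARNING": "Walk me through all code suggestions you made because I am learning Rust.",
    "EXPLORATIONMODE": "If you need more information to answer, ask me to clarify my intent and goal.",
}


def check_keywords(library: Dict[str, str]) -> None:
    """Raise if one keyword is a substring of another, which would make matching ambiguous."""
    keys = list(library)
    for a in keys:
        for b in keys:
            if a != b and a in b:
                raise ValueError(f"keyword {a!r} is contained in {b!r}")


def write_library(root: Path, library: Dict[str, str]) -> List[Path]:
    """Write one Markdown file per keyword: keyword line, blank line, guidance."""
    check_keywords(library)
    folder = root / ".qdeveloper"
    folder.mkdir(parents=True, exist_ok=True)
    written: List[Path] = []
    for keyword, body in library.items():
        path = folder / f"prompt_personalization_{keyword.lower()}.md"
        path.write_text(f"{keyword}\n\n{body}\n", encoding="utf-8")
        written.append(path)
    return written
```

Keeping the library as data makes it easy to share, review, and version alongside the repository it steers.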

Last but not least, as I said, this may not be the greatest UX. Having to type @workspace + a KEYWORD to trigger this behaviour may not be the best experience. I have seen other assistants solve this problem with plugin configurations and/or by reading from a specific file. This may be better for transparency (even though you'd lose some of the flexibility of using multiple "pre-prompts" depending on what you are doing). If you have opinions on what the best UX for this type of personalization could be, I am all ears (reach out via the links above).

Food for thought.

Massimo.