vCloud Director 5.1(.1) Changes in Resource Entitlements (Updated)

vCloud Director 5.1 introduces a fair amount of new functionality. One of the changes concerns the resource allocation models. I have tried to capture the changes from vCloud Director 1.5 to 5.1 in a couple of tables. For those of you who are new to vCloud Director, it may be a good idea to get some background and a complete explanation of how the various resource allocation models work. This whitepaper on vCloud Director 1.5 is a good source of information. Oh, and at the end of this post I added an allocation model selection criteria section (sort of) to try to make sense of all this complexity (ahem, richness).

Kidding aside, it will sound complex, but this is the "tax" you need to pay for being able to provide (as a cloud provider) and consume (as a cloud consumer) virtual data centers instead of virtual machines. After all, flying a Boeing 747 is inherently more difficult than driving a Fiat Panda, but in the end it really boils down to where you need to go.

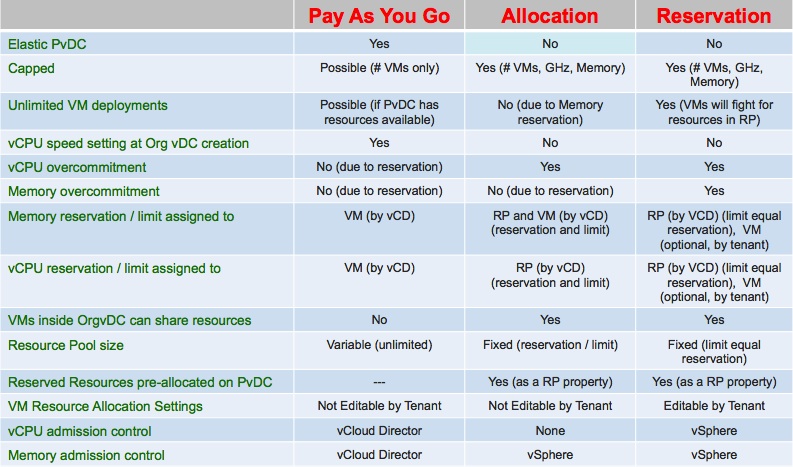

This is a summary of how the three models work with vCloud Director 1.5.

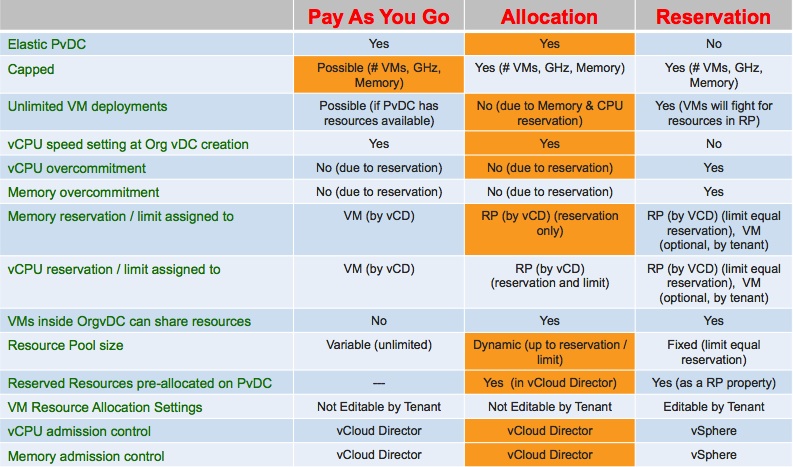

This is a summary of how the three models work now with vCloud Director 5.1. The yellow cells represent the changes from the previous version of the stack.

So let's start with the easy part. No change at all for the Reservation Pool model. Easy. Done.

There is only one small change in the PAYG model. The cloud administrator can now create an Org vDC that is capped not only by the number of VMs but also by CPU and memory resource limits. This is cool because many customers (and Service Providers) liked the PAYG model but needed to cap the tenant with something more sophisticated than the mere absolute number of VMs. vCloud Director 5.1 now delivers the capability to cap the tenant on a resource consumption basis. This is, of course, an optional, additional parameter that doesn't change the original PAYG model behavior of vCloud Director 1.5.

As you can see most of the changes (and dramas) come with the Allocation Pool model. This has generated some reactions from our customers (and Service Providers). More on this later.

To change or not to change, that is the problem

Most of these changes were introduced to support elasticity for the Allocation Pool model. For those of you new to this concept, VMware defines an Org vDC as elastic when it can draw resources from all the clusters that make up a Provider vDC. Conversely, when the Org vDC can only draw resources from the primary (or only) cluster in the Provider vDC, it is defined, guess what, as non-elastic.

In an elastic Org vDC scenario, every time vCloud Director deploys or deletes a VM, it dynamically increases or decreases the size of the Resource Pools (RPs from now on). And it can do this across different clusters, so that the sum of all the RPs dedicated to the tenant is reconciled at the vCloud Director level, which now owns admission control for all of those VMs.
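
To make the admission-control idea concrete, here is a minimal Python sketch of an elastic versus a non-elastic Org vDC, tracking memory only. It is a simplified model under stated assumptions, not vCloud Director code: the `Cluster` and `OrgVdc` classes and the placement policy (pick the cluster with the most free capacity) are hypothetical. What it shows is that admission is checked against the sum of the per-cluster RPs, while each RP grows or shrinks as VMs come and go.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Cluster:
    name: str
    free_gb: float  # capacity still available in this cluster (hypothetical input)


@dataclass
class OrgVdc:
    allocation_gb: float                 # memory allocated to the Org vDC
    clusters: List[Cluster]              # one cluster = non-elastic, several = elastic
    rp_size_gb: Dict[str, float] = field(default_factory=dict)  # RP size per cluster

    def used_gb(self) -> float:
        # Admission control is done on the sum of all the tenant's RPs
        return sum(self.rp_size_gb.values())

    def deploy(self, vm_gb: float) -> Cluster:
        if self.used_gb() + vm_gb > self.allocation_gb:
            raise RuntimeError("Org vDC allocation exceeded")
        # Hypothetical placement policy: the cluster with the most free capacity
        candidates = [c for c in self.clusters if c.free_gb >= vm_gb]
        if not candidates:
            raise RuntimeError("no cluster can host this VM")
        target = max(candidates, key=lambda c: c.free_gb)
        target.free_gb -= vm_gb
        # Grow the RP on the chosen cluster
        self.rp_size_gb[target.name] = self.rp_size_gb.get(target.name, 0.0) + vm_gb
        return target

    def delete(self, cluster: Cluster, vm_gb: float) -> None:
        # Shrink the RP and give the capacity back to the cluster
        self.rp_size_gb[cluster.name] -= vm_gb
        cluster.free_gb += vm_gb
```

With two clusters of 6 GB free each, a 10 GB elastic Org vDC can host two 4 GB VMs (one per cluster), while a non-elastic Org vDC restricted to a single 6 GB cluster fails on the second VM even though its 10 GB allocation is not yet exhausted.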

The good news is that, in vCloud Director 5.1, Org vDCs created with the Allocation Pool model are elastic. The bad news is that this requires some changes in the behavior of this model.

With vCloud Director 1.5, admission control and resource governance for the Allocation Pool model were largely (but not completely) delegated to vSphere. The drawback was that vCloud Director had to create, upfront, an RP that statically mapped the characteristics of the Org vDC the cloud administrator was creating. And the only way to do this was to create a single, fixed-size RP in the primary cluster of the Provider vDC. In other words: no elasticity.

What effect does this have on VMs deployed with vCloud Director 1.5 in an Org vDC created with the Allocation Pool model? vCloud Director 1.5 would set a reservation and a limit on each VM being deployed, based on the % of guaranteed capacity defined when the cloud administrator created the Org vDC. For example, if I have an Org vDC with 10GB of memory and a 50% guarantee and I deploy a 4GB VM, vCloud Director will set a 4GB limit and a 2GB reservation (50% of 4GB) on the VM.

However, the way vCloud Director 1.5 managed CPU resources was a totally different story. vCloud Director 1.5 didn't set any reservation or limit on the vCPUs deployed in an Org vDC created with the Allocation Pool model. The cloud consumer could deploy an infinite number of vCPUs (well, OK...) and all of them would fight for the capacity of the fixed-size RP backing the Org vDC.
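
The vCloud Director 1.5 per-VM behavior described above can be summarized in a few lines of Python. This is a simplified sketch: the function name and the dictionary keys are hypothetical, and only the memory and CPU settings discussed here are modeled.

```python
from typing import Dict, Optional


def vcd15_allocation_pool_vm(vm_memory_gb: float, guarantee_pct: int) -> Dict[str, Optional[float]]:
    """Per-VM settings in a vCD 1.5 Allocation Pool Org vDC (simplified, hypothetical helper)."""
    return {
        # Memory: limit = configured VM memory, reservation = guaranteed % of it
        "memory_limit_gb": vm_memory_gb,
        "memory_reservation_gb": vm_memory_gb * guarantee_pct / 100,
        # CPU: nothing is set per VM; all vCPUs share the fixed-size RP
        "cpu_limit_ghz": None,
        "cpu_reservation_ghz": None,
    }


print(vcd15_allocation_pool_vm(4, 50))
```

For the 4GB VM with a 50% guarantee, this returns a 4 GB limit, a 2 GB reservation and no CPU settings at all.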

vCloud Director 5.1 moves this on-the-fly resource manipulation from the VM level to the RP level. This allows vCloud Director to treat RPs as dynamic entities (without having to create them upfront with a fixed size) and to spread them across many clusters.

Wait a moment, it's easy to move that memory manipulation from the VMs to the RPs. There is still one piece missing though: how can vCloud Director implement the same RP dynamism with CPU resources? How can vCloud Director 5.1 expand and shrink CPU capacity in the RPs as VMs are deployed and deleted? Enter the vCPU speed parameter.

This new parameter, available in the Allocation model wizard in vCloud Director 5.1, allows the system to apply limits and reservations for the CPU subsystem at the RP level. Take this example: a cloud administrator sets a vCPU speed of 2 GHz when creating an Org vDC. The Org vDC has 10 GHz worth of CPU capacity with a 50% guarantee. As a result, when the user deploys a VM with one vCPU, the system increments the limit of the RP (or of one of the RPs, in an elastic Org vDC scenario) by 2 GHz and its reservation by 1 GHz.
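
Here is a minimal Python sketch of the RP-level bookkeeping described above, using integer MHz to avoid floating-point rounding. The `ResourcePool` class and the function names are hypothetical; they model the arithmetic of the example, not the vCloud Director API.

```python
from dataclasses import dataclass


@dataclass
class ResourcePool:
    cpu_limit_mhz: int = 0
    cpu_reservation_mhz: int = 0


def on_vm_deployed(rp: ResourcePool, vcpus: int, vcpu_speed_mhz: int, guarantee_pct: int) -> None:
    # vCD 5.1 Allocation Pool: each vCPU adds its speed to the RP limit...
    rp.cpu_limit_mhz += vcpus * vcpu_speed_mhz
    # ...and the guaranteed share of that speed to the RP reservation
    rp.cpu_reservation_mhz += vcpus * vcpu_speed_mhz * guarantee_pct // 100


def on_vm_deleted(rp: ResourcePool, vcpus: int, vcpu_speed_mhz: int, guarantee_pct: int) -> None:
    # Deleting the VM shrinks the RP by the same amounts
    rp.cpu_limit_mhz -= vcpus * vcpu_speed_mhz
    rp.cpu_reservation_mhz -= vcpus * vcpu_speed_mhz * guarantee_pct // 100


rp = ResourcePool()
on_vm_deployed(rp, vcpus=1, vcpu_speed_mhz=2000, guarantee_pct=50)
print(rp)  # ResourcePool(cpu_limit_mhz=2000, cpu_reservation_mhz=1000)
```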

That's how vCloud Director 5.1 achieves CPU elasticity and dynamicity with the Allocation Pool model through the vCPU speed parameter.

This is all good, but customers and Service Providers started to provide feedback. Essentially it boils down to two things:

- If you set the vCPU speed too high, you can deploy only a limited number of VMs/vCPUs before the system reaches the CPU cap of your Org vDC. In the example above (10 GHz allocated, 50% reserved, vCPU speed = 2 GHz), that would be 5 vCPUs.

- If you set the vCPU speed too low, you work around the problem above, but the first VMs you deploy will experience low performance. At steady state, the many VMs will all compete for the same "big" capacity in the Org vDC, but initially that capacity is very limited, because it grows from 0 as VMs are added.

If, for example, the vCPU speed is set at 200 MHz, the first three VMs (with one vCPU each) will deploy in an RP that has a 600 MHz limit (200 MHz x 3) and a 300 MHz reservation (50% of 600 MHz). Even if these three VMs peak at different times (which is likely), none of their individual vCPUs will be able to use all the nominal capacity of the Org vDC (10 GHz). As more VMs are deployed, the perceived behavior gets closer and closer to what it was with vCloud Director 1.5 (that is, all vCPUs fighting for a big bucket of resources).
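
The trade-off between the two bullets can be quantified with a couple of helper functions. This is a sketch under the assumptions of the examples above (10 GHz Org vDC, 50% guarantee, single-vCPU VMs); the function names are hypothetical, and values are integer MHz to keep the arithmetic exact.

```python
from typing import Tuple


def max_vcpus(allocation_mhz: int, vcpu_speed_mhz: int) -> int:
    # Every vCPU adds vcpu_speed to the RP limit, which cannot exceed the allocation
    return allocation_mhz // vcpu_speed_mhz


def rp_after(vcpus: int, vcpu_speed_mhz: int, guarantee_pct: int) -> Tuple[int, int]:
    # (limit, reservation) of the RP once `vcpus` vCPUs have been deployed
    limit = vcpus * vcpu_speed_mhz
    return limit, limit * guarantee_pct // 100


ALLOCATION_MHZ = 10_000
print(max_vcpus(ALLOCATION_MHZ, 2000))   # 5 vCPUs with a 2 GHz vCPU speed
print(max_vcpus(ALLOCATION_MHZ, 200))    # 50 vCPUs with a 200 MHz vCPU speed
print(rp_after(3, 200, 50))              # (600, 300): 6% of the nominal 10 GHz
```

Lowering the vCPU speed raises the vCPU ceiling tenfold, but the first few VMs see only a small fraction of the Org vDC capacity.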

Ironically, the "problem" mentioned in the first bullet above already existed for the memory subsystem with vCloud Director 1.5. In other words, the cloud consumer couldn't oversubscribe memory. As cloud consumers added VMs, the memory reservations and limits of those VMs would count against the reservation and limit of the RP backing the Org vDC, up to the point where the system would refuse to deploy more VMs. The consensus seems to be that this is OK for memory but not for CPU. For the CPU subsystem, it appears that setting a hard and predictable limit on the number of vCPUs a tenant can deploy is not acceptable. And all this regardless of the fact that those "infinite" vCPUs were deployed in a bucket that had limited, finite capacity anyway. I am not sure how much of this discussion is technical and how much is psychological.

For example, I think this CPU enforcement is a good way to set an average "vCPU to core" ratio, so that the tenant doesn't deploy a number of vCPUs that greatly exceeds the ratio the cloud administrator has determined to be optimal. Consider the example below, which I am stealing from an internal discussion (not my wording, but I like the way it is explained):

Assume that a tenant wants to purchase 100 GHz CPU and 100 GB memory guaranteed in the cloud with an option to burst 4X opportunistically. We need an allocation pool Org VDC of 100 GHz of CPU reservation and 100 GB of memory reservation. If the hardware backing the Provider VDC has a core frequency of 1 GHz (say), you can set the vCPU to GHz mapping to 1 GHz. Next up, you will need an estimate of how much CPU over subscription you / customer want to do. Assuming 4:1 over subscription ( = 4, same as 4X burst), you can allocate up to 400 VMs (reservation * over subscription / vCPU) with 1 vCPU from this Org VDC. This requires an allocation of 400 GHz. So, to configure the allocation pool Org VDC, you would set it up with an allocation of 400 GHz and 25% guarantee so that you get 100 GHz CPU reserved. Setting vCPU = 1 GHz will allow all the 1 vCPU VMs to consume up to 1 GHz (core frequency) and a user can provision up to 400 VMs in this Org VDC.
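
The sizing recipe in the quote reduces to a few lines of arithmetic. A minimal Python sketch, assuming integer inputs and single-vCPU VMs as in the example; the function and key names are hypothetical:

```python
def size_allocation_pool(reserved_ghz: int, oversubscription: int, vcpu_speed_ghz: int) -> dict:
    """Derive Allocation Pool Org vDC settings from reserved capacity and a target vCPU oversubscription."""
    allocation_ghz = reserved_ghz * oversubscription            # 100 GHz x 4 = 400 GHz
    guarantee_pct = 100 * reserved_ghz // allocation_ghz         # 100 / 400 = 25%
    max_single_vcpu_vms = allocation_ghz // vcpu_speed_ghz      # 400 GHz / 1 GHz = 400 VMs
    return {
        "allocation_ghz": allocation_ghz,
        "guarantee_pct": guarantee_pct,
        "vcpu_speed_ghz": vcpu_speed_ghz,
        "max_single_vcpu_vms": max_single_vcpu_vms,
    }


print(size_allocation_pool(reserved_ghz=100, oversubscription=4, vcpu_speed_ghz=1))
```

Matching the vCPU speed to the physical core frequency is what turns the vCPU ceiling into the intended "vCPU to core" ratio.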

I think this makes sense. I like it. But the fact is that a few customers started to complain vocally about this new CPU resource management behavior in vCloud Director 5.1.

Enter vCloud Director 5.1.1. VMware heard this feedback loud and clear, and vCloud Director 5.1.1 introduces a slight change that allows a cloud administrator to revert, if desired, to an Allocation Pool model experience similar to that found in vCloud Director 1.5. In particular, vCloud Director 5.1.1 introduces only one change:

- vCloud Director creates the RP(s) in the cluster(s) with the limit set upfront based on the Org vDC allocated size.

In essence, because the RP limit is pre-set to the nominal size of the Org vDC, the cloud administrator can now set a low vCPU speed value (the vCPU speed is no longer used to increment the RP limit at VM deployment time, because the limit is already provisioned upfront). This means that the very first VM immediately finds the big bucket of CPU resources it is supposed to draw from.

Note, however, that vCloud Director still increments the reservation of the RP(s) at VM deployment time, based on the vCPU speed setting and the guaranteed % specified in the Org vDC creation wizard. This hasn't changed from vCloud Director 5.1. The other thing that hasn't changed (based on my tests) is that the vCPU speed cannot be set below 0.26 GHz (260 MHz), so when I say "a low vCPU speed value", 0.26 GHz is the lowest it can go.
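
The difference between 5.1 and 5.1.1 lies only in how the RP limit is set; the reservation is computed the same way. Here is a minimal sketch of that comparison, in integer MHz. The 260 MHz floor reflects the tests mentioned above rather than a documented constant, and the function name is hypothetical.

```python
from typing import Tuple

MIN_VCPU_SPEED_MHZ = 260  # lowest value observed in the tests above, not a documented constant


def rp_cpu_settings(version: str, allocation_mhz: int, guarantee_pct: int,
                    vcpu_speed_mhz: int, deployed_vcpus: int) -> Tuple[int, int]:
    """Return the (limit, reservation) of the RP backing an Allocation Pool Org vDC."""
    if vcpu_speed_mhz < MIN_VCPU_SPEED_MHZ:
        raise ValueError("vCPU speed below the minimum accepted value")
    # Both releases grow the reservation with every deployed vCPU
    reservation = deployed_vcpus * vcpu_speed_mhz * guarantee_pct // 100
    if version == "5.1":
        limit = deployed_vcpus * vcpu_speed_mhz   # limit grows as VMs are deployed
    elif version == "5.1.1":
        limit = allocation_mhz                    # limit set upfront to the full allocation
    else:
        raise ValueError(f"unsupported version: {version}")
    return limit, reservation


for release in ("5.1", "5.1.1"):
    print(release, rp_cpu_settings(release, 10_000, 50, 260, deployed_vcpus=1))
```

With one VM at the minimum vCPU speed, both releases reserve 130 MHz, but 5.1 caps the RP at 260 MHz while 5.1.1 exposes the full 10 GHz.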

This means that the CPU reservation of this bucket is still dynamic: it is directly proportional to the number of VMs deployed and is calculated from the vCPU speed parameter and the % of reserved CPU resources (as in vCloud Director 5.1). This is deemed acceptable because the assumption is that most clusters are memory constrained, so not reserving CPU upfront at the pool level isn't going to be a big problem (in most circumstances).

It is important to pay attention to the details introduced in 5.1.1. Because an RP with the total allocated capacity is created on every cluster backing a Provider vDC, this could potentially lead the cloud administrator to provision more resources to the tenant than the tenant subscribed to. For example, with vCloud Director 5.1.1 a 10 GHz Org vDC based on the Allocation Pool model would result in "n" RPs with a 10 GHz limit each, where "n" is the number of clusters backing the Provider vDC.
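
The overprovisioning arithmetic is straightforward: in 5.1.1 the CPU limit a tenant can reach grows linearly with the number of clusters. A minimal sketch (hypothetical function name), assuming one RP per cluster with the full allocation as its limit, as described above:

```python
def cpu_limit_exposure_ghz(allocation_ghz: int, num_clusters: int) -> dict:
    # vCD 5.1.1: each cluster backing the Provider vDC gets an RP whose limit is the full allocation
    aggregate_limit = allocation_ghz * num_clusters
    return {
        "aggregate_limit_ghz": aggregate_limit,
        "beyond_subscription_ghz": aggregate_limit - allocation_ghz,
    }


for n in (1, 2, 3):
    print(n, cpu_limit_exposure_ghz(10, n))
```

A single-cluster Provider vDC exposes nothing beyond the subscription, which is why the 5.1.1 behavior is harmless in that case.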

If you are using the vCPU speed parameter as it is intended to be used with the new vCloud Director 5.1 Allocation Pool model (see example above), the above behavior isn't relevant. In fact all tenants will have a bigger bucket of shared CPU capacity to draw from but will still be limited in the number of VMs they can deploy and, more importantly, will still be provided with the same reserved CPU capacity as it was with vCloud Director 5.1.

In vCloud Director 5.1.1, unwanted overprovisioning of resources may arise when both of the following circumstances are true:

- the Provider vDC is backed by multiple clusters to gain elasticity

- the cloud provider sets a very low vCPU speed parameter to bypass the limit on the number of VMs that can be deployed

Under these circumstances, tenants can indeed deploy a very high number of VMs (the number of VMs that can be deployed tends to infinity as the vCPU speed tends to zero). The first side effect is that tenants get access to a large bucket of overprovisioned resources, because an RP with the allocation limit is set on every cluster that is part of the Provider vDC. The second side effect is that the reservation for each tenant is set to a low number (since it is still proportional to the vCPU speed and the number of VMs deployed), leaving different tenants with a potentially high number of VMs all fighting for shared resources without proper reservations.
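
The combined effect of the two circumstances can be illustrated by comparing the same tenant (10 GHz Org vDC, 50% guarantee, five single-vCPU VMs, three clusters) at a 2 GHz and at the minimum 260 MHz vCPU speed. This is a minimal sketch with hypothetical names, assuming the VM ceiling is still the allocation divided by the vCPU speed, that the reservation follows vCPU speed times deployed vCPUs, and that every cluster's RP carries the full allocation as its limit.

```python
def tenant_profile(allocation_mhz: int, guarantee_pct: int, vcpu_speed_mhz: int,
                   deployed_vcpus: int, num_clusters: int) -> dict:
    # Assumed VM ceiling: allocation / vCPU speed (grows as the speed drops)
    max_vcpus = allocation_mhz // vcpu_speed_mhz
    if deployed_vcpus > max_vcpus:
        raise ValueError("Org vDC would refuse this deployment")
    return {
        "max_vcpus": max_vcpus,
        # Reservation is still proportional to vCPU speed x deployed vCPUs
        "reservation_mhz": deployed_vcpus * vcpu_speed_mhz * guarantee_pct // 100,
        # Limit reachable across all RPs (one per cluster, each at the full allocation)
        "reachable_limit_mhz": allocation_mhz * num_clusters,
    }


for speed in (2000, 260):
    print(speed, tenant_profile(10_000, 50, speed, deployed_vcpus=5, num_clusters=3))
```

With the same five VMs, dropping the vCPU speed from 2 GHz to 260 MHz raises the vCPU ceiling from 5 to 38 and cuts the tenant's reservation from 5,000 MHz to 650 MHz, while the reachable limit stays at 30 GHz, three times the subscribed 10 GHz.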

VMware recognizes this is not ideal, but the assumption is that many customers and SPs running vCloud Director 1.5 use Provider vDCs backed by a single cluster, so the change introduced with vCloud Director 5.1.1 will allow them to upgrade transparently to this release and keep a vCloud Director 1.5-like behavior for the Allocation Pool model. In the future you may see additional flexibility in how to leverage these different behaviors.

This is pretty much about it. And trust me, I gave you the simplified story. There are a lot more details I am not getting into in the interest of time.

OK but what does all this mean for me?

I am sure you are more confused now than when you started reading this post, so perhaps it makes sense to put a stake in the ground and underline the advantages and disadvantages of the three models with vCloud Director 5.1.

The PAYG model is the simplest of the three. This model allows the tenant to scale without pre-configured limits. It also allows cloud consumers to scale without any contractual agreement on resources. Sophisticated capping mechanisms now allow the cloud administrator to limit a tenant based on the number of VMs and on CPU and memory resources. One thing to notice is that all VMs in a PAYG Org vDC are standalone entities with specific limits and guarantees that can't be shared with the other VMs of the same tenant. So if a VM is not using all the reserved capacity available to it, that capacity cannot be used by other VMs of the tenant that are demanding more resources. The other typical disadvantage of this model is that it works on a first-come, first-served basis. Given that the cloud consumer didn't subscribe to allocated or reserved resources, the system may refuse to deploy VMs at any time, depending on the state of resource consumption in the Provider vDC.

The Allocation Pool model is interesting because it allows the cloud administrator (but not the cloud consumer) to oversubscribe resources. The level of oversubscription is set by the cloud administrator at the time the Org vDC is created, and the cloud consumer cannot alter those values. The most evident advantage of this model is that the cloud consumer has a set of allocated and reserved resources that have been subscribed (typically for a month). The other advantage of this model is that all VMs in the same Org vDC can share CPU and memory resources inside a bucket of resources that is dedicated (yet oversubscribed) to the tenant. The disadvantage of this model is that the cloud consumer can deploy only a finite number of VMs before their total resources hit the limit of the Org vDC.

The Reservation Pool model is radically different from the other two. In this model a Resource Pool is completely dedicated and committed to the cloud consumer. This means that all oversubscription mechanisms are delegated to the tenant, giving the cloud consumer the flexibility to choose the oversubscription ratio of resources. The disadvantage of this model is that the cloud administrator cannot benefit from oversubscribing resources at Org vDC instantiation, given that the resources allocated to the tenants are 100% reserved. This means that the cloud consumer will have to absorb the cost of this premium service from the cloud provider. Note that the Reservation Pool model (with vCloud Director 5.1) is the only one that doesn't support elasticity, which further limits the cloud provider's flexibility and architectural choices.

Massimo.

Update: On November 6th this post went through a heavy update. The previous version of the post included some misleading and erroneous information on how vCloud Director 5.1.1 works.