Infiniband Vs 10Gbit Ethernet… with an eye on virtualization

Last week I came across a hardware configuration requested ad hoc by a customer to support their VMware VI3 setup. The hardware, an IBM System x 3950 M2 4-way (I know it's not yet generally available as of this writing), was configured with as many as 5 quad-port 10/100/1000 Ethernet adapters, which account for 5 x 4 Ethernet ports plus the 2 on-board NICs. The grand total was 22 Gigabit Ethernet ports per physical server. I thought: "there must be something wrong here".

I must admit that this is a customer I haven't worked with (closely), so I don't know exactly where this number comes from, even though I can imagine that it is due to the typical practice of physically segmenting every specific network service in a VMware VI3 environment. If you start, for example, to dedicate a physical interface to the Service Console, one to VMotion, one to iSCSI / NFS, one to the dedicated Backup network and one to each of the production networks, and you multiply everything by 2 (for redundancy reasons), you easily get to a double-digit number of network adapters even for the most basic setup. Consider that this is not a technical limitation, since there are a number of technologies (such as VLANs and VMware Port Groups) that would allow you to logically segment all the networks above. In the final analysis it is typically a design decision based on best practices and the customer's internal policies/politics. I don't want to get into the design principles now, but on average the bigger the scope of the project is (that is... the bigger the customer is), the more stringent these policies/politics are. So it's not uncommon to see many Small and Medium Business customers running with fewer adapters and Enterprise customers running with many more NICs (all the way to 22!).
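To make the arithmetic above concrete, here is a minimal sketch of the count. The function name and the number of production networks are my own assumptions for illustration; only the list of services comes from the paragraph above.

```python
# Hypothetical illustration: count the physical NICs a host needs when
# every network service gets its own dedicated, redundant adapter.

# Services named in the text above.
SERVICES = ["Service Console", "VMotion", "iSCSI/NFS", "Backup"]


def physical_nics(services, production_networks, redundancy=2):
    """Return the NIC count for fully physical segmentation.

    One adapter per service and per production network, multiplied by
    the redundancy factor (2 means every link is doubled).
    """
    segments = len(services) + production_networks
    return segments * redundancy


if __name__ == "__main__":
    # The number of production networks is an assumption; try a few values.
    for prod in (1, 2, 4):
        total = physical_nics(SERVICES, prod)
        print(f"{prod} production network(s): {total} NICs")
```

Even with a single production network the count is already in double digits, which is the point: the number is driven by how many networks you segment, not by how much bandwidth you need.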

No matter what your opinion is on the topic (in terms of how to properly design a VI3 network layout), I am sure you'll agree with me that the requirement for a high number of network adapters is primarily due to segmentation issues rather than raw performance issues. Let me put it another way: if you need to have 22 NICs configured in your host, it is not (usually) because you need 22 Gbit/second of (nominal) bandwidth out of your 4-way server. Most likely it is because you need to segment your network layout and so need 22 x RJ45 physical copper ports to plug in somewhere. And by the way, you could probably do with 10/100 Mbit/sec adapters, but that is neither "cool" nor "practical". And if you instead need that many ports for raw performance reasons... then you should triple-think whether you want to do that on top of a hypervisor these days, since it's going to add so much overhead that it's going to be like driving a 22-cylinder Ferrari through Manhattan: the bottleneck is not going to be the number of cylinders!

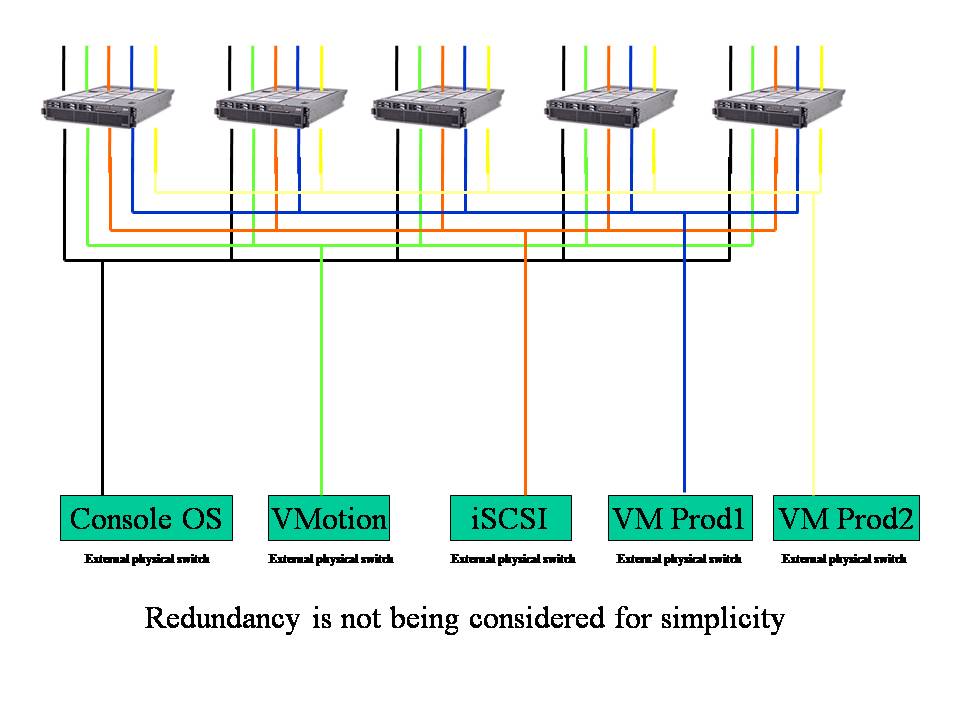

Having this said 22 NIC's is a bit of an extreme number. Usually the number of "suggested" Ethernet ports might vary between 4-6 and 12-14. For those of you that do not have a solid background on the matter the layout would look something like this:

As you can see, the number of network connections adds complexity to the physical design of the cluster and the datacenter. Consider also that the layout in the picture above does not even take redundancy into account so, even though a bit of optimization can be achieved, you should in general multiply these connections by a factor of two. This is how and why you can easily require 6 or 8 or 10 Ethernet connections per virtualized host. As you can imagine, this is not a "bandwidth" problem.

And this reminds me of a panel I attended at VMworld regarding the future of I/O technologies, which immediately turned into a one-hour debate on "will the future of I/O be 10Gbit Ethernet or will it be Infiniband?". That was an interesting session. I am not going to go into detail about what Infiniband is, but in a nutshell it is a technology that allows you to collapse both Ethernet and Fibre Channel protocols onto a single transport medium. Its other major characteristics are:

- high bandwidth

- low latency

- "I/O virtualization"

It sounds good at first, but one big drawback of this technology is that it would require completely new cabling and a new datacenter architecture (i.e. switches etc.), and for those customers that have heavily invested in Ethernet and Fibre Channel architectures (and know-how) this is not a viable option.

Here the discussion might become very complex, as people working on Ethernet technology would argue that Ethernet might be considered the future base transport for both storage and network (i.e. with iSCSI), whereas Infiniband people would argue instead that it is Infiniband that has been designed from the ground up to be the datacenter interconnect technology, and so on. I want however to keep this discussion very simple and only focus on the current "network" problem for most of the customers using or approaching virtualization technologies that require a certain (typically high) number of network interfaces.

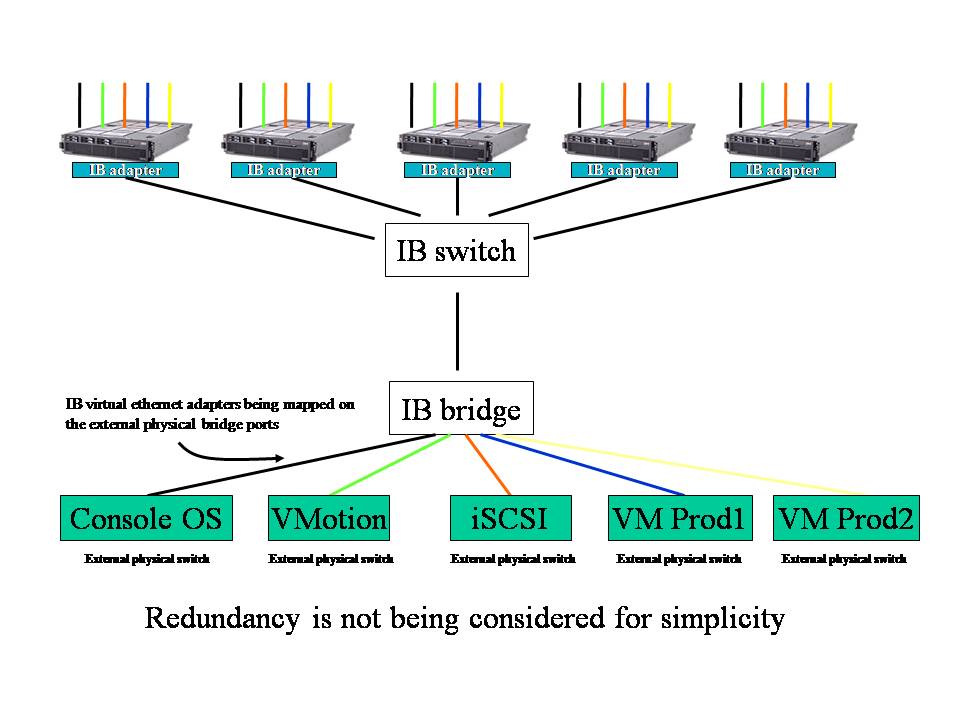

I must say upfront that I have not any vested interest in supporting one technology over the other but I think that during that VMworld session the prominent "10 Gbit Ethernet" gurus took the problem from a wrong perspective: "10 Gbit Ethernet is 10 times faster than 1 Gbit Ethernet so this means less cable and less complexity". If that is what the technology has to offer then I don't think this is going to solve our problems because in a couple of years I would be using 22 (or whatever the number is) x 10 Gbit adapters in a 4-socket system ... just because at that point 22 x 1Gb adapters will be either no longer "cool" nor "practical". Remember it is not (usually) a bandwidth problem but rather a physical segmentation issue. Which brings me to a few aspects of Infiniband that the Infiniband gurus did not leverage too much, in my opinion, during that panel and that are going to make this technology (potentially) more appealing as it doesn't just use brute performance force to provide added value. There are two major points that are worth considering in this discussion: the first is that VMware is supposedly going to support Infiniband technologies in the upcoming VI3.5 due in a few weeks/months (this is no longer a secret). The second important aspect to take into account is the fact that Infiniband technology can be "bridged" into legacy datacenter I/O architectures such as standard Ethernet and Fibre Channel devices. No one would want to rip and replace its datacenter network infrastructure with a brand new technology when Ethernet is doing very well at what it is supposed to do. The problem is not Ethernet per se; the problem is that, because of the scenarios we are implementing we need so many Ethernet NIC's inside a single server that this technology alone is becoming not practical. Assuming you have a VI3 datacenter with as many as 20 ESX nodes and each of these nodes have, say for example, 12 Ethernet NICs... 
wouldn't it be cool to "centralize" this complexity of 12 NIC's into a sort of concentrator and allow the 20 nodes to talk to each other in a much more simplified and efficient way (yet allowing them to talk to the legacy network)? Well this scenario is possible using Infiniband bridges that connect the Infiniband (IB) network to the legacy network. Confused? I can imagine... but a picture should explain this much better:

Basically, by installing a single Infiniband (IB) host adapter into each server, you can create a number of "virtual ports" that map into the IB switches and in turn into the IB-Bridges to connect to your legacy Ethernet infrastructure. If you have to have 22 x RJ45 Ethernet cables because your internal policies / politics require you to connect to physically segmented networks... that's fine, you can do that, but instead of duplicating 22 x RJ45 Ethernet NICs in each server you have centralized them onto the IB-Bridge device. It goes without saying that this technology allows you to "expose" the same networks you plug into the IB-Bridges all the way into the ESX Servers using a mix of virtual IB Ethernet adapters and VMware Port Groups. After all, what you want to do is:

- Create something that is transparent to and compliant with your network policies / politics

- Continue to use your VI3 hosts as if they had the typical 8, 10 or 12 NICs connected to them (in terms of network visibility)

- Yet get rid of these physical 8, 10 or 12 NICs per server
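To put rough numbers on the consolidation argument, here is a back-of-the-envelope sketch using the 20-node, 12-NIC example from above. The function names are mine, and two simplifications are assumptions rather than facts about any specific product: one IB host adapter per server with no redundancy, and one Ethernet uplink on the IB-Bridge per segmented network.

```python
# Hypothetical back-of-the-envelope comparison of host-side cabling.
# Assumptions (not from any vendor documentation): one IB host adapter
# per server, no redundancy, and one bridge uplink per segmented network.


def ethernet_only_ports(hosts, nics_per_host):
    """Ethernet ports (and cables) when every host carries its own NICs."""
    return hosts * nics_per_host


def ib_bridged_links(hosts, segmented_networks, hcas_per_host=1):
    """Return (IB links from hosts, Ethernet uplinks on the IB-Bridge)."""
    ib_links = hosts * hcas_per_host
    bridge_uplinks = segmented_networks
    return ib_links, bridge_uplinks


if __name__ == "__main__":
    hosts, nics = 20, 12  # figures from the example in the text
    print("Ethernet only:", ethernet_only_ports(hosts, nics), "ports")
    ib_links, uplinks = ib_bridged_links(hosts, nics)
    print("IB bridged:", ib_links, "IB links +", uplinks, "Ethernet uplinks")
```

Doubling the host adapters for redundancy (`hcas_per_host=2`) still leaves you far below the Ethernet-only cable count, and the segmented Ethernet ports live in one place instead of being repeated in every server.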

I was surprised that the discussion in that panel was geared more towards raw performance than towards other features and scenarios like this one, because I really think that, from a purely theoretical perspective, Infiniband would have more to say than 10Gbit Ethernet, specifically for relatively big VI3 deployments. It's also interesting to note that technically Infiniband allows you to carry Fibre Channel traffic too so, without adding any new adapter to the server, you can add a Fibre Channel IB-Bridge to the picture and let the ESX hosts connect transparently to legacy FC Storage Servers through the same IB host adapter.

Having said that, I have learned over the years that it's not always the best technology or solution that wins, so chances are that we will never see the massive adoption of IB technologies (and their partitioning/virtualization features) that would make IB a "business as usual" type of technology for the "average Joe administrator".

Time will tell.

Massimo.