Embracing Amazon Q Developer failures

Amazon Q Developer is what pays my bills and the generative AI-based code assistant I use most regularly. From what I am reading and hearing, the rant in this post may apply to other generative AI-based tools and experiences.

I have, for a long time, been saying that using generative AI is like driving a car. You can let it crash into a wall (and make fun of the car on Twitter) or you can drive it to get to the beach. I have since come to the conclusion that your expectations are what make the biggest difference in how you perceive these tools. Take SWE-bench, for example. This is a benchmark that measures the success rate of generative AI coding assistant agents in closing issues. At the time of this writing, the best tools out there (check them out in the link above) are able to close roughly 20% of the issues in the Full version of the benchmark. Is that good? Is that bad? You tell me. I have heard people saying "that means they are 80% wrong, that's awful quality". And I have heard people saying "looks like if I use these tools I could take all Fridays off from now till I retire". Expectations. Perceptions. Points of view.

In this post I want to share a simple example of how I embrace failures when using Amazon Q to make it useful for me. I measure usefulness as "is the tool saving me time to get-the-job-done?". In other words, as long as fixing the things it gets wrong takes me less time than I save with the things it gets right, the value it provides is net positive. It's math.
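To make that arithmetic concrete, here is a minimal sketch. The function names and the minute figures are made up for illustration; they are not measurements from the experiment described in this post.

```python
def net_minutes_saved(saved_by_correct_output: float, spent_fixing_errors: float) -> float:
    """Time gained from what the assistant gets right, minus time lost fixing what it gets wrong."""
    return saved_by_correct_output - spent_fixing_errors


def is_net_positive(saved_by_correct_output: float, spent_fixing_errors: float) -> bool:
    """The tool is useful (by this post's definition) when the balance is above zero."""
    return net_minutes_saved(saved_by_correct_output, spent_fixing_errors) > 0


if __name__ == "__main__":
    # Hypothetical session: 45 minutes saved by a good first draft,
    # 15 minutes spent chasing a hallucination.
    print(net_minutes_saved(45, 15))
    print(is_net_positive(45, 15))
```

The point is not the code, it is that both terms count: a tool that is wrong often can still come out ahead if its mistakes are cheap to spot and fix.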

I often test Amazon Q Developer against questions that get asked in internal forums. I always wonder... would Q be able to help solve that problem? The other day one of these questions caught my attention:

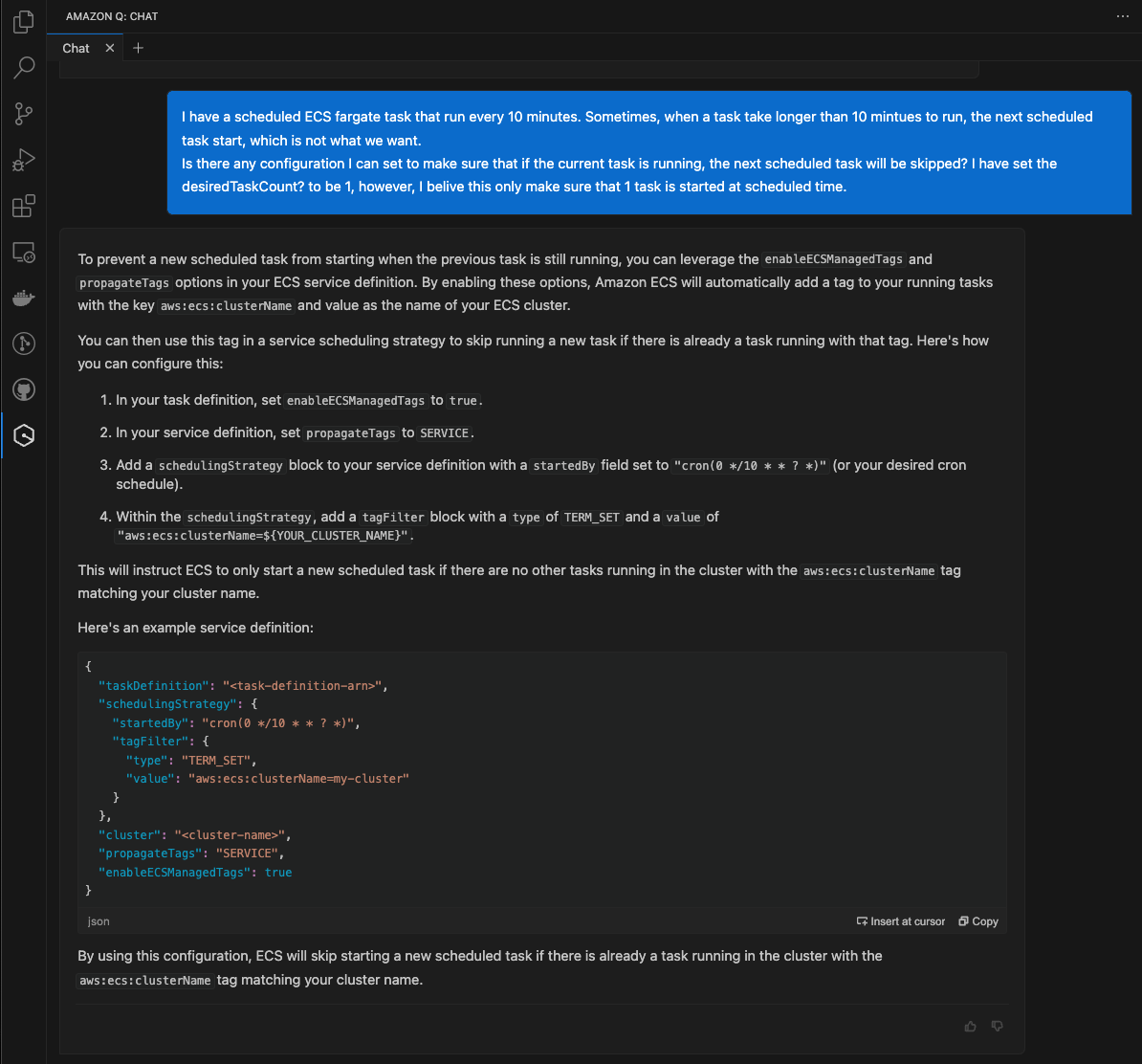

I have a scheduled ECS fargate task that run every 10 minutes. Sometimes, when a task take longer than 10 minutes to run, the next scheduled task start, which is not what we want. Is there any configuration I can set to make sure that if the current task is running, the next scheduled task will be skipped? I have set the desiredTaskCount? to be 1, however, I believe this only make sure that 1 task is started at scheduled time.

This intrigued me because the topic of containers is still close to my heart. I can't say I am an Amazon ECS expert who knows all its intricacies, but my gut feeling is that it doesn't have advanced logic out of the box for dealing with a scenario like this. My sense is that you'd need to implement some sort of scheduled run-task action with a check on whether the previous task is still running at the next scheduled time. I have used AWS Step Functions in the past to augment ECS functionality and I have a feeling that this use case could be attacked with a similar approach.
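That "run-task with a check" idea can be sketched in a few lines of Python. This is only an illustration of the logic, not a tested solution: the function name is hypothetical, and the `ecs` argument is assumed to behave like a boto3 ECS client (`boto3.client("ecs")`), of which only `list_tasks` and `run_task` are used.

```python
from typing import Any, Optional


def run_task_unless_running(
    ecs: Any, cluster: str, family: str, run_task_kwargs: dict
) -> Optional[str]:
    """Start a task only if no task of the same family is already running.

    Returns the ARN of the task that was started, or None if this run was skipped.
    """
    # Tasks whose desired status is RUNNING (this also covers tasks still starting up).
    running = ecs.list_tasks(cluster=cluster, family=family, desiredStatus="RUNNING")
    if running.get("taskArns"):
        return None  # the previous run has not finished: skip this schedule

    # Extra arguments (launch type, network configuration, ...) are passed through as-is.
    response = ecs.run_task(cluster=cluster, taskDefinition=family, **run_task_kwargs)
    tasks = response.get("tasks", [])
    return tasks[0]["taskArn"] if tasks else None
```

Something would still need to call this every 10 minutes, and there is a small window between the check and the start in which two invocations could both see "nothing running". That is part of why an orchestrator such as Step Functions feels like a more natural home for this logic.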

The first thing I do is to prompt the question to Amazon Q Developer in the IDE (in a completely empty workspace):

Dang. I am familiar enough with ECS to figure this answer is somewhat of a hallucination (I think). I could stop here and let it crash into a wall, but I won't. It is possible that Q was tripped up by the reference to the desiredTaskCount (which happens to be a property of the ECS "service" object). In this situation we would not want to use an ECS service though, because this is not a long-running service use case. This is more of a standalone task use case. The quality of the prompt is important, yet Q shouldn't have hallucinated. But it did.

Note that, even if you are not familiar with ECS, it won't take long to figure out this is a hallucination. But, yes, it could take more than just reading the answer.

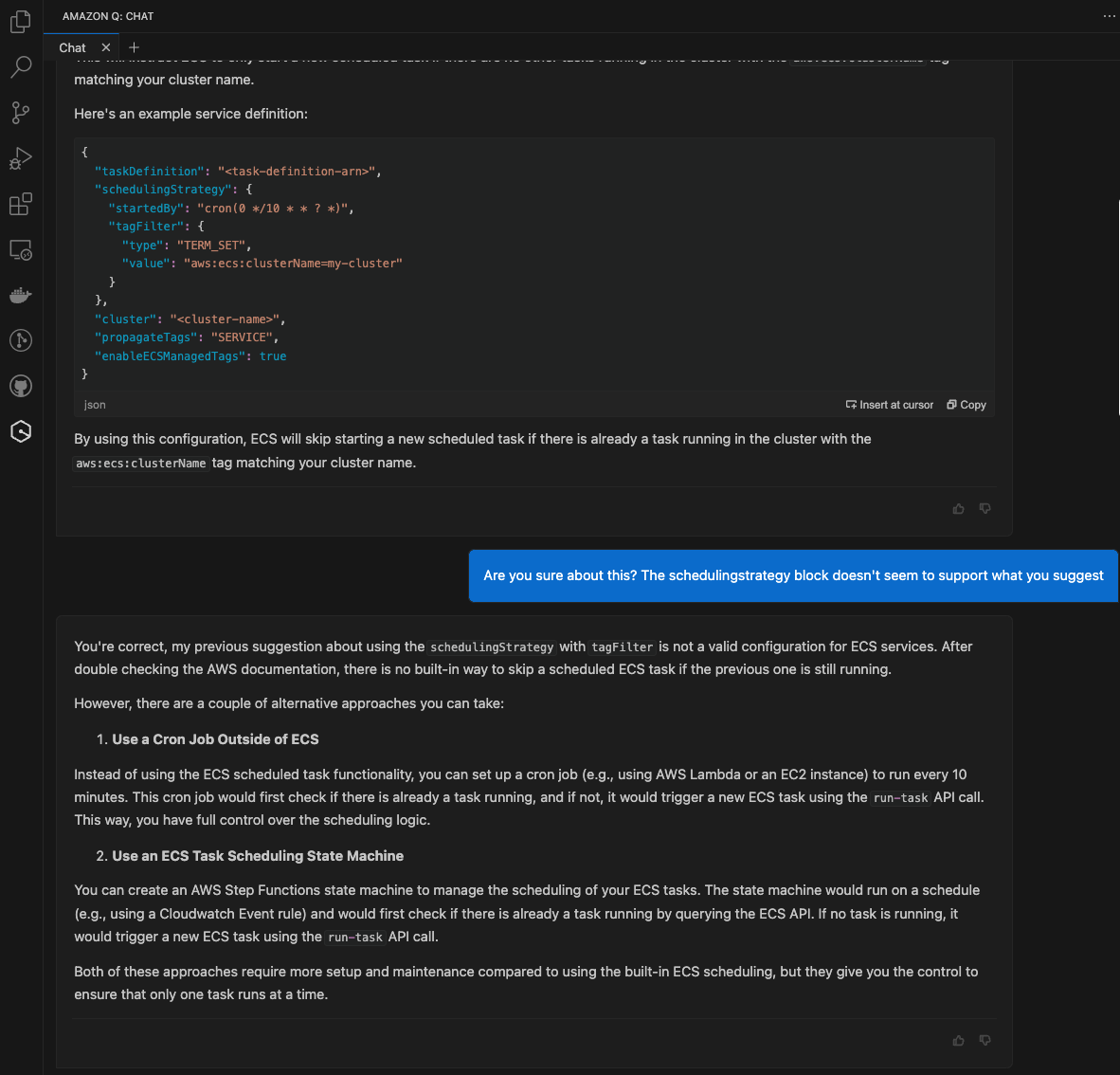

Next, I try to steer it because, ultimately, I want to try to go to the beach. So I challenge it:

I like this a bit more. Interestingly, it's mentioning Step Functions, which was my first guess. Are we onto something? If anything, at least statistically, it looks like Step Functions could be a viable way to solve this problem.

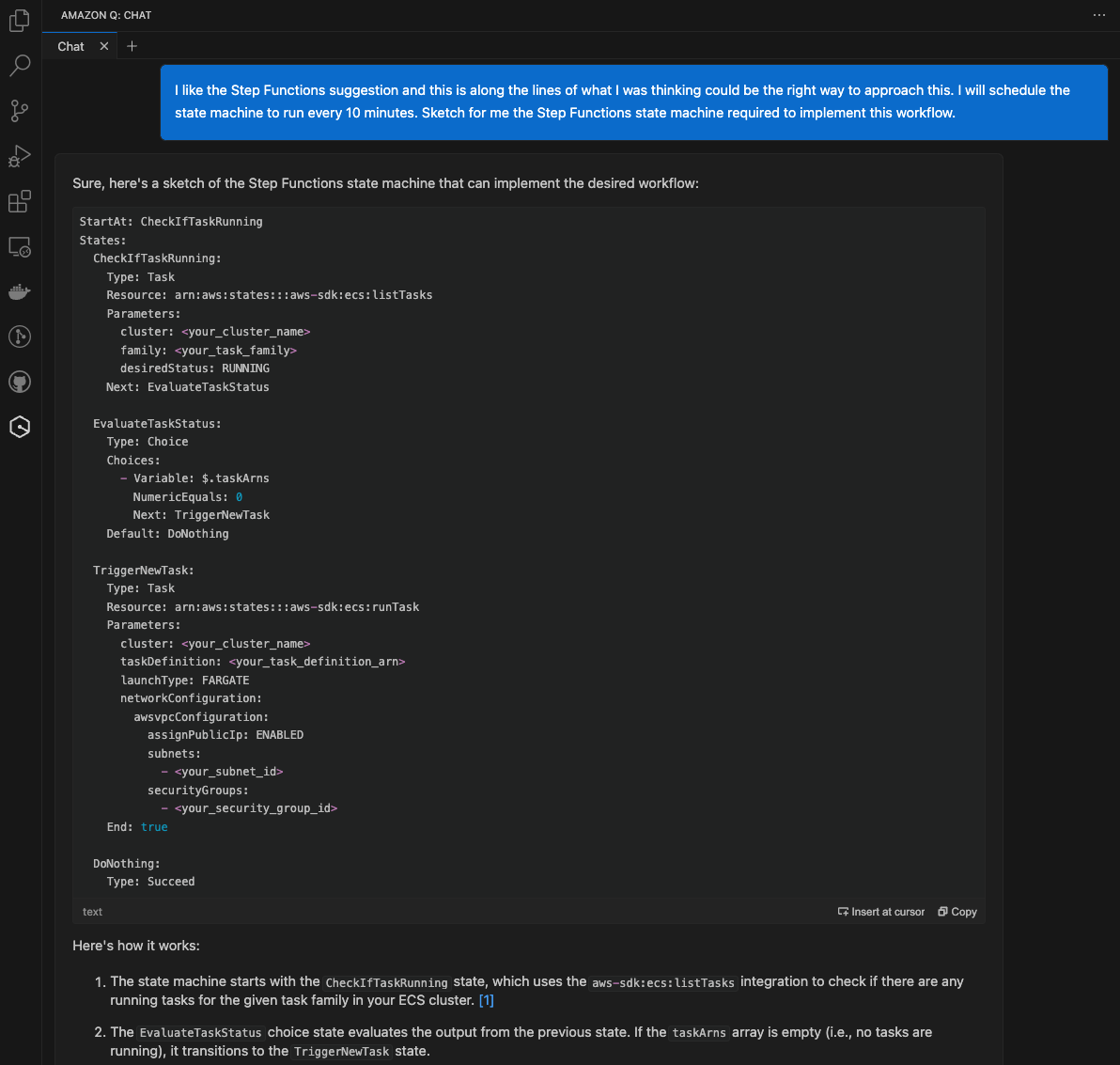

This is where I think code assistants could really save time. I know I could work this out myself by stitching pieces together from docs and existing examples and trying until I get something working. But why not use Amazon Q to produce a first pass of what a state machine implementing this specific workflow could look like? Let's prompt it:

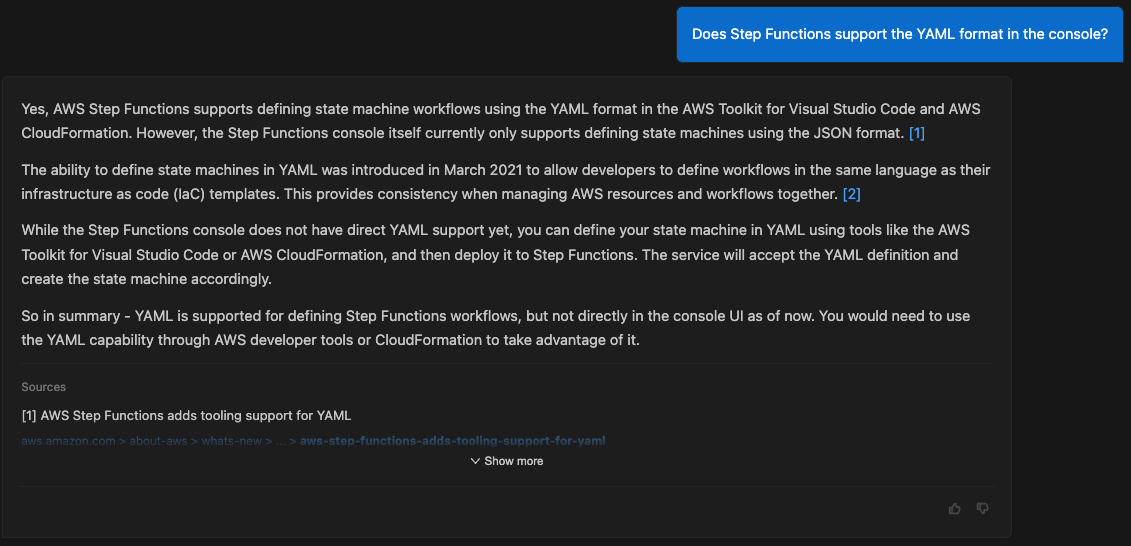

I am way more rusty on Step Functions than I am on ECS, so I can't judge the quality of this code snippet. I opt to copy and paste it into a new state machine in the Step Functions console. This action results in a bunch of errors I have never seen before. I have also never seen a state machine in YAML format, so I asked Q (in a separate tab) whether this was possible. And Q said nope.

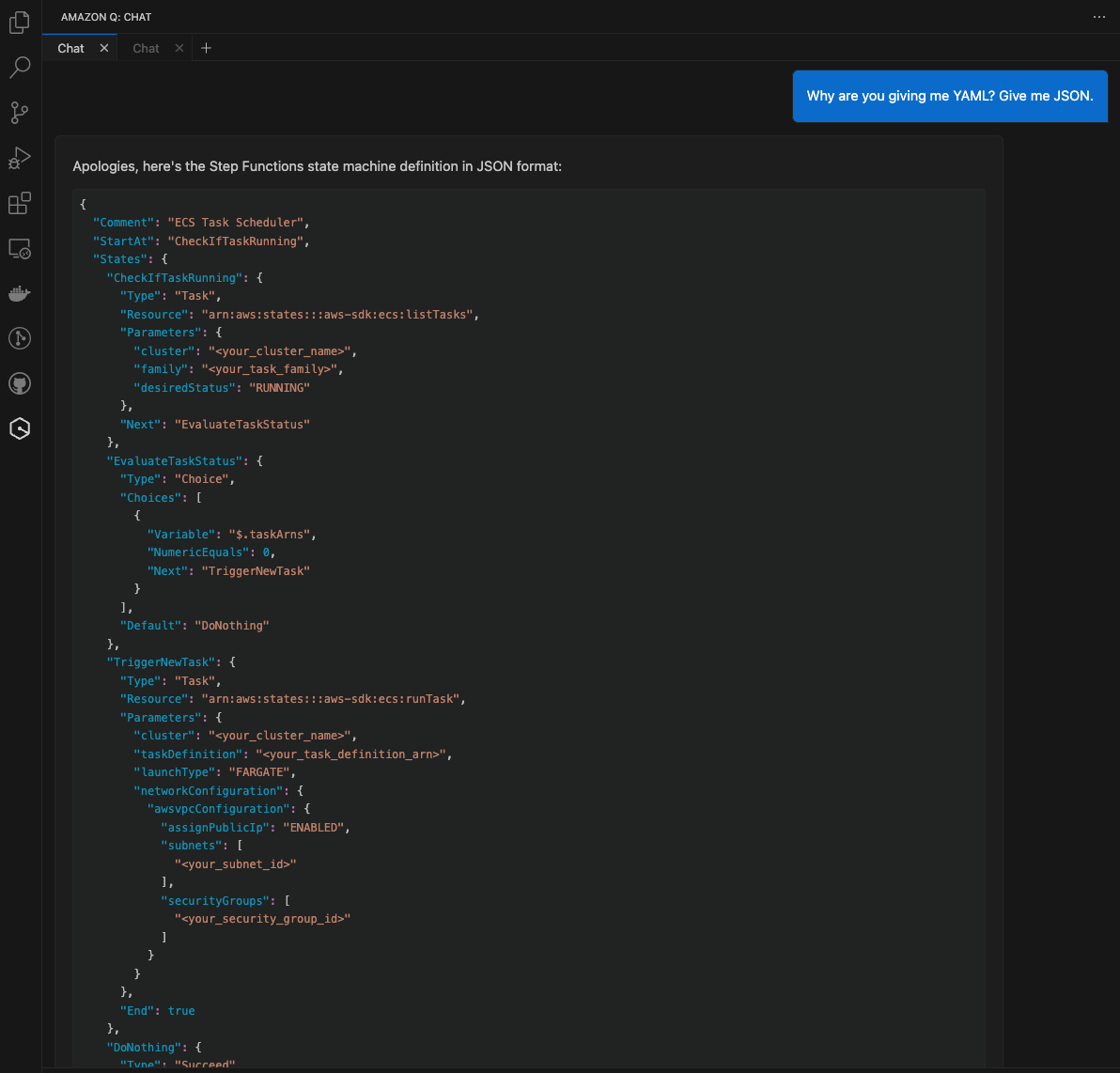

This made sense because it confirmed what I was seeing. I went back to my main conversation and asked Q to give me the JSON version of it (in retrospect, I was a bit rude, sorry Q):

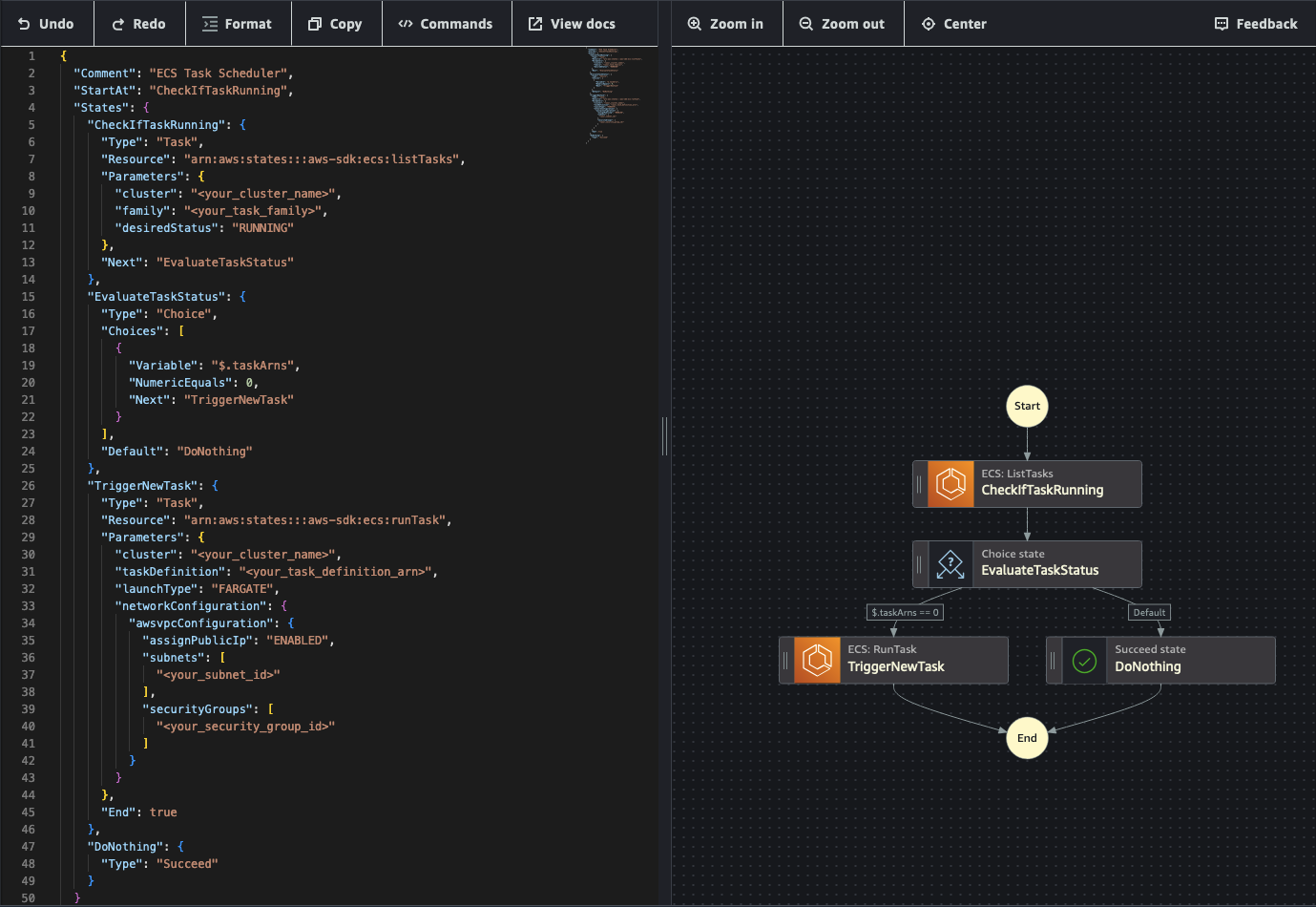

By copying and pasting (no manual modifications) the new JSON version into the Step Functions console, I have the core skeleton of the solution that I was looking for (with no syntax error messages):

I did not go all the way to test the workflow end-to-end, and I am sure there are things that would need to be fixed. However, I would say that, despite the hallucinations and the things it got slightly wrong, I was able to save time by using this assistant versus integrating the various pieces on my own from scratch to get to this point. Because I am somewhat familiar with ECS, and to some extent Step Functions, I'd argue I was in the Boost zone of my framework to adopt generative AI assistants for builders.
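For a sense of what a skeleton for this kind of workflow can look like, here is a rough sketch that builds a state machine definition as a Python dictionary and prints it as JSON. To be clear, this is not the definition Q generated, and I have not run it in Step Functions: the state names, the use of the AWS SDK `listTasks` integration, and the parameters are my own assumptions, and a real Fargate task would also need settings (such as network configuration) that are left out here.

```python
import json


def build_state_machine(cluster: str, family: str) -> dict:
    """Return a hypothetical 'skip if still running' workflow definition."""
    return {
        "Comment": "Run an ECS task only if the previous run has finished",
        "StartAt": "ListRunningTasks",
        "States": {
            # Ask ECS which tasks of this family are currently meant to be running.
            "ListRunningTasks": {
                "Type": "Task",
                "Resource": "arn:aws:states:::aws-sdk:ecs:listTasks",
                "Parameters": {
                    "Cluster": cluster,
                    "Family": family,
                    "DesiredStatus": "RUNNING",
                },
                "Next": "IsTaskRunning",
            },
            # If at least one task ARN came back, skip this scheduled run.
            "IsTaskRunning": {
                "Type": "Choice",
                "Choices": [
                    {"Variable": "$.TaskArns[0]", "IsPresent": True, "Next": "SkipThisRun"}
                ],
                "Default": "RunTask",
            },
            "SkipThisRun": {"Type": "Succeed"},
            # Otherwise start the task and wait for it to complete.
            "RunTask": {
                "Type": "Task",
                "Resource": "arn:aws:states:::ecs:runTask.sync",
                "Parameters": {
                    "Cluster": cluster,
                    "TaskDefinition": family,
                    "LaunchType": "FARGATE",
                },
                "End": True,
            },
        },
    }


if __name__ == "__main__":
    # "my-cluster" and "my-task" are placeholder names.
    print(json.dumps(build_state_machine("my-cluster", "my-task"), indent=2))
```

A schedule firing every 10 minutes would then start the state machine rather than the task itself.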

Yes, you can indeed drive these things into a wall. But why not try to get to the beach? Or take all Fridays off till you retire?

If this is of interest to you, you can use Amazon Q Developer in the IDE for free with a generous free tier. Find the IDE setup instructions in the Getting Started page. Or just use the AI code assistant of your choice for that matter.

Embrace failure. If "Everything fails, all the time" (and I am quoting), why shouldn't that apply to generative AI-based code assistants?

Massimo.