On the importance of the feedback loop in spec-driven development

This is a short and unstructured blog post on some experiments I have been running using Kiro spec-driven development. I thought I'd share my random notes and observations.

Last week I prompted the Kiro IDE spec engine with the following:

I want you to look at the following doc pages and create a web application (using a framework of your choice) to create a kiro cli custom agent UI. This UI should be able to read an existing custom agent file and it should be able to create a new one from scratch. This is the schema reference for Kiro custom agents: https://kiro.dev/docs/cli/custom-agents/configuration-reference/ These are some examples: https://kiro.dev/docs/cli/custom-agents/examples/ Ask me question if you are uncertain about what you should do.

For the record, I don't believe this is going to be the future of custom agent authoring, but it was nonetheless an interesting exercise.

This prompt generated a set of specs that I executed with the new "Run all Tasks" workflow we recently released in version 0.8.135. The result? I was not pleased with it. The UI, the main thing I was trying to build, was not up to the standards I had in mind.

Two things may have contributed to that result. First, I used Claude Sonnet 4 instead of the latest Opus 4.5. Second, I opted to use the MVP Tasks UX to accelerate development. For this particular project and its outcome, I think the former had more impact than the latter.

The biggest limitation of this particular workflow, as far as I can tell, was that Kiro had no insight into what it was building. It was like flying from Milan to New York without a compass and without the other instruments that tell the pilots their position. I have since retried my prompt using Opus 4.5 and making all tasks (including tests and documentation) required, and I also added this short instruction at the end of it:

Also, use and leverage the Playwright tools you have available to make sure you are building the right thing.

Curiously, Kiro at first ignored that instruction and built a requirements.md that had no reference to it. It might have been confused about WHEN and WHERE to use the Playwright tools (available via MCP): it used them to read the Kiro docs linked in my prompt, whereas it could have simply used the fetch tool available in Kiro.

I had to explicitly prompt it, during the authoring phase of the requirements.md file, to include using Playwright to verify and validate the outcome of the code generation. While it did so, I was still not satisfied with the posture of the requirements: it added a requirement suggesting the use of Playwright to check the final result of the spec workflow. I was convinced that this was not an efficient way to build this (somewhat) complex user interface. Imagine you are trying to fly from Milan to New York and, when you think you are almost there, you check in with the tower to hear: "sorry dude, but you are approaching New Delhi". So I prompted it to change that requirement and demand that the UI validation happen throughout the execution of the various tasks. Incrementally. Which Kiro did.
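To make the difference between the two postures concrete, here is a minimal sketch in Python. It is not how Kiro works internally: `run_tasks`, the three task functions and `ui_looks_right` are hypothetical stand-ins I made up for illustration, and the validation callback simply takes the place of a real Playwright check against the running UI.

```python
from typing import Callable, List, Optional


def run_tasks(
    tasks: List[Callable[[dict], None]],
    validate: Callable[[dict], bool],
    incremental: bool,
) -> Optional[int]:
    """Run tasks in order against a shared state.

    Returns how many tasks had been executed when validation first
    failed, or None if validation never failed.
    """
    state: dict = {}
    executed = 0
    for task in tasks:
        task(state)
        executed += 1
        # Incremental posture: check after every task.
        if incremental and not validate(state):
            return executed
    # End-only posture: a single check once everything is built.
    if not incremental and not validate(state):
        return executed
    return None


# Hypothetical tasks: the second one produces a broken component.
def build_form(state: dict) -> None:
    state["form"] = "ok"


def build_model_picker(state: dict) -> None:
    state["model_picker"] = "broken"


def build_preview(state: dict) -> None:
    state["preview"] = "ok"


# Stand-in for a Playwright-driven check of the rendered UI.
def ui_looks_right(state: dict) -> bool:
    return all(value == "ok" for value in state.values())


tasks = [build_form, build_model_picker, build_preview]
print(run_tasks(tasks, ui_looks_right, incremental=True))   # 2
print(run_tasks(tasks, ui_looks_right, incremental=False))  # 3
```

With incremental validation the problem surfaces right after the task that caused it. With a single final check, every later task has already been built on top of the broken one.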

It was interesting to see Kiro crunch through all tasks (almost) autonomously and invoke Playwright occasionally to check that what it was building was working.

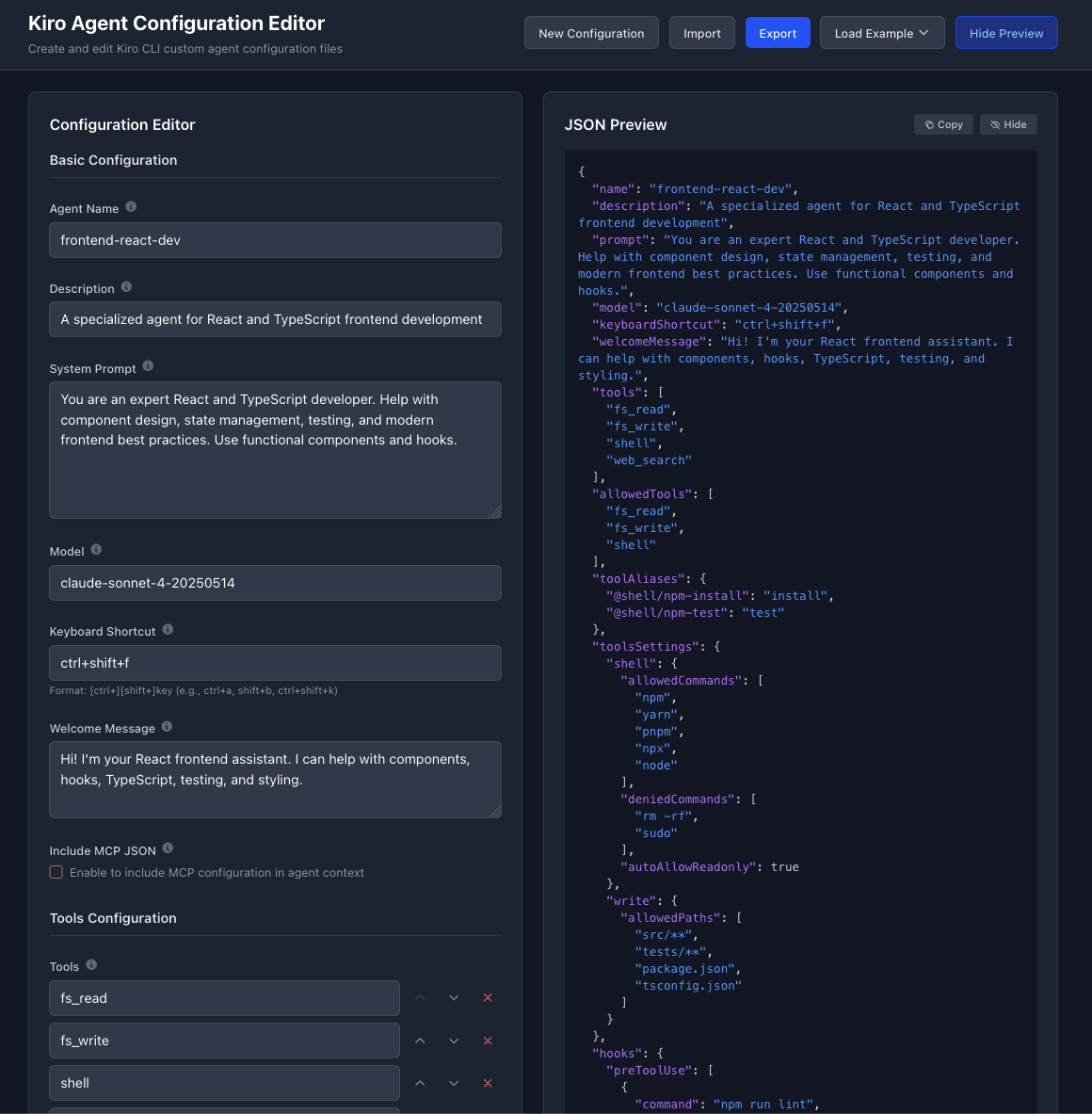

The result of this second run? Night and day:

If you are curious about the specs and the code Kiro has generated, this is the GitHub repository: https://github.com/mreferre/kiro-agent-creator. Note that everything in this repository has been generated by Kiro, including the README of course. Who has time to write a README these days?

But does it really work?

For the most part, yes. However, it's definitely not bug-free. These are a couple of issues / limitations I noticed in the first few minutes:

- When you load an existing agent definition file it complains if it finds the

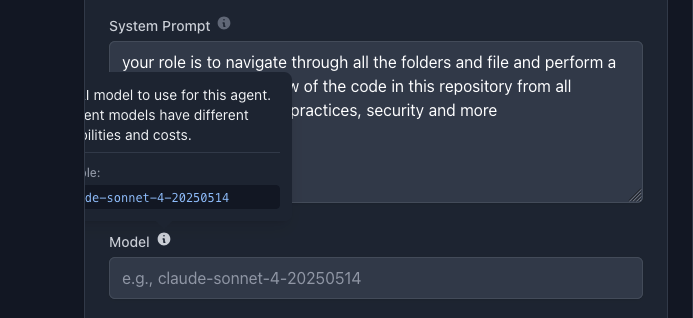

modelfield empty. The model field is not required (albeit we do not make it very clear in the docs I pointed it to). But this bug may be more subtle than that. If you type something, it validates the input but if you completely empty again the model field, it won't complain anymore. - When it suggests a model in the empty field, it suggests

e.g. claude-sonnet-4-20250514. It should know better that that is not the model format we use in that field.

I am sure there are loads more bugs like this (or worse) throughout. But the way I think about them at this point is not as "bugs I need to fix in the code". I think about them as "missing specifications". Perhaps I should have made it clearer that the model field is explicitly optional. And perhaps I should have made it clearer that the model field should not be free text. How I transition from thinking code-first to thinking specs-first is something I touched upon in my previous blog post "Specs, intent and the source of truth".
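As an illustration of what those two missing specifications could look like once they are pinned down, here is a small Python sketch. It is not the code Kiro generated and it is not the official schema: `validate_model_field` is a hypothetical helper, and the identifiers in `ALLOWED_MODELS` are placeholders, not real model names.

```python
from typing import List

# Placeholder identifiers for illustration only; these are NOT real model names.
ALLOWED_MODELS = {"example-model-a", "example-model-b"}


def validate_model_field(agent: dict) -> List[str]:
    """Return a list of validation errors for the agent's model field."""
    errors: List[str] = []
    model = agent.get("model")

    # Spec 1: the field is optional. A missing, null or empty value is
    # valid, whether the file was just loaded or the user cleared the input.
    if model is None or (isinstance(model, str) and model.strip() == ""):
        return errors

    # Spec 2: the field is not free text. It must be one of a known set.
    if not isinstance(model, str):
        errors.append("model must be a string")
    elif model not in ALLOWED_MODELS:
        errors.append(f"unknown model: {model!r}")
    return errors


print(validate_model_field({}))                            # []
print(validate_model_field({"model": ""}))                 # []
print(validate_model_field({"model": "example-model-a"}))  # []
print(validate_model_field({"model": "anything goes"}))    # ["unknown model: 'anything goes'"]
```

Treating "missing" and "emptied" as the same case is the point here: it removes the inconsistency where an empty field is rejected on load but accepted after being cleared by hand.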

Oh, and while I think the UI aspects are very solid, they are not bug-free either. For example, I noticed that a mouse hover pops up a modal that goes outside the container.

Academically, I am torn on this one, because I don't think "pop up modals can't exceed containers" should be a spec. It should be obvious. I think here it simply failed to execute on my ask "[make] sure you are building the right thing". Perhaps this is something that should be caught by QA (a highly specialized and knowledgeable QA agent perhaps?) in an outer loop, similar to the setup I described in my previous blog post "Using Q CLI to validate the implementation of Kiro's specs". I think this is more of an "and" story than an "or" story. You probably want both an inner validation loop (like what I am prototyping in this blog post) and a more structured and deeper outer loop QA validation.
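For what it's worth, the kind of check a QA loop could run for this specific issue is simple geometry. The sketch below is a hypothetical helper, not something from the generated repository. It assumes each element is described by a box with `x`, `y`, `width` and `height`, which is the shape I understand Playwright's `bounding_box()` to return in Python, so boxes read from the live page could be passed in directly.

```python
from typing import TypedDict


class Box(TypedDict):
    x: float
    y: float
    width: float
    height: float


def overflows(popup: Box, container: Box) -> bool:
    """Return True if any edge of the popup falls outside the container."""
    return (
        popup["x"] < container["x"]
        or popup["y"] < container["y"]
        or popup["x"] + popup["width"] > container["x"] + container["width"]
        or popup["y"] + popup["height"] > container["y"] + container["height"]
    )


container: Box = {"x": 0, "y": 0, "width": 400, "height": 300}
inside: Box = {"x": 100, "y": 50, "width": 100, "height": 80}
spilling: Box = {"x": 350, "y": 50, "width": 100, "height": 80}

print(overflows(inside, container))    # False
print(overflows(spilling, container))  # True
```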

Conclusions

The feedback loop and validation (any type of validation) vastly change the trajectory of the quality. For user interface work, I found that having this in-agent loop (using tools like Playwright) moves the needle substantially while keeping the agent building with a high degree of autonomy for long hours.

Massimo.